zstd

Zstandard - Fast real-time compression algorithm

OTHER License


This release highlights the deployment of Google Chrome 123, which introduces zstd-encoding for Web traffic as a preferable option for compressing dynamic content. Because web server support for zstd-encoding is still limited due to its novelty, we are launching an updated Zstandard version to facilitate broader adoption.

New stable parameter ZSTD_c_targetCBlockSize

When zstd compresses large documents sent over the Internet, the data is segmented into blocks of up to 128 KB, which allows incremental updates. This is crucial for applications like Chrome that process parts of a document as they arrive. However, on slow or congested networks, transmission can stall briefly in the middle of a block, delaying the update. To mitigate such scenarios, libzstd introduces the new stable parameter ZSTD_c_targetCBlockSize, which divides blocks into even smaller segments so that the first bytes are delivered sooner. Activating this feature has a cost: runtime (roughly -2% speed at level 8) and a slight decrease in compression efficiency (<0.1%). In exchange, it offers a meaningful latency reduction, which is notably beneficial in regions with less powerful network infrastructure.

Granular binary size selection

libzstd provides build customization, including options to compile only the compression or only the decompression module, to minimize binary size. v1.5.6 refines this with finer-grained control: specific components within these modules, such as individual compression strategies, can now be selectively included or excluded. This helps applications that need precise control over binary size.

Miscellaneous Enhancements

This release includes various minor enhancements and bug fixes that improve the user experience. Key updates include an expanded list of recognized compressed-file suffixes for the --exclude-compressed flag, which improves efficiency by skipping presumably incompressible content. Compatibility has also been broadened to additional architectures (sparc64, ARM64EC, RISC-V) and operating systems (QNX, AIX, Solaris, HP-UX).

Change Log

api: Promote ZSTD_c_targetCBlockSize to Stable API by @felixhandte

api: new experimental ZSTD_d_maxBlockSize parameter, to reduce streaming decompression memory, by @terrelln

perf: improve performance of param ZSTD_c_targetCBlockSize, by @Cyan4973

perf: improved compression of arrays of integers at high compression, by @Cyan4973

lib: reduce binary size with selective build-time exclusion, by @felixhandte

lib: improved Huffman speed on small data and in the Linux kernel, by @terrelln

lib: accept dictionaries with partial literal tables, by @terrelln

lib: fix CCtx size estimation with external sequence producer, by @embg

lib: fix corner case decoder behaviors, by @Cyan4973 and @aimuz

lib: fix zdict prototype mismatch in static_only mode, by @ldv-alt

lib: fix several bugs in magicless-format decoding, by @embg

cli: add common compressed file types to --exclude-compressed by @daniellerozenblit (requested by @dcog989)

cli: fix mixing -c and -o commands with --rm, by @Cyan4973

cli: fix erroneous exclusion of hidden files with --output-dir-mirror by @felixhandte

cli: improved time accuracy on BSD, by @felixhandte

cli: better errors on argument parsing, by @KapJI

tests: better compatibility with older versions of grep, by @Cyan4973

tests: lorem ipsum generator as default content generator, by @Cyan4973

build: cmake improvements by @terrelln, @sighingnow, @gjasny, @JohanMabille, @Saverio976, @gruenich, @teo-tsirpanis

build: Bazel support, by @jondo2010

build: fix cross-compiling for AArch64 with lld by @jcelerier

build: fix Apple platform compatibility, by @nidhijaju

build: fix Visual 2012 and lower compatibility, by @Cyan4973

build: improve win32 support, by @DimitriPapadopoulos

build: better C90 compliance for zlibWrapper, by @emaste

port: make: fat binaries on macOS, by @mredig

port: ARM64EC compatibility for Windows, by @dunhor

port: QNX support by @klausholstjacobsen

port: MSYS2 and Cygwin makefile installation and test support, by @QBos07

port: risc-v support validation in CI, by @Cyan4973

port: sparc64 support validation in CI, by @Cyan4973

port: AIX compatibility, by @likema

port: HP-UX compatibility, by @likema

doc: Improved specification accuracy, by @elasota

bug: Fix and deprecate ZSTD_generateSequences (#3981), by @terrelln

Full change list (auto-generated)

- Add win32 to windows-artifacts.yml by @Kim-SSi in https://github.com/facebook/zstd/pull/3600

- Fix mmap-dict help output by @daniellerozenblit in https://github.com/facebook/zstd/pull/3601

- [oss-fuzz] Fix simple_round_trip fuzzer with overlapping decompression by @terrelln in https://github.com/facebook/zstd/pull/3612

- Reduce streaming decompression memory by (128KB - blockSizeMax) by @terrelln in https://github.com/facebook/zstd/pull/3616

- removed travis & appveyor scripts by @Cyan4973 in https://github.com/facebook/zstd/pull/3621

- Add ZSTD_d_maxBlockSize parameter by @terrelln in https://github.com/facebook/zstd/pull/3617

- [doc] add decoder errata paragraph by @Cyan4973 in https://github.com/facebook/zstd/pull/3620

- add makefile entry to build fat binary on macos by @mredig in https://github.com/facebook/zstd/pull/3614

- Disable unused variable warning in msan configurations by @danlark1 in https://github.com/facebook/zstd/pull/3624

- https://github.com/facebook/zstd/pull/3634

- Allow Build-Time Exclusion of Individual Compression Strategies by @felixhandte in https://github.com/facebook/zstd/pull/3623

- Get zstd working with ARM64EC on Windows by @dunhor in https://github.com/facebook/zstd/pull/3636

- minor : update streaming_compression example by @Cyan4973 in https://github.com/facebook/zstd/pull/3631

- Fix UBSAN issue (zero addition to NULL) by @terrelln in https://github.com/facebook/zstd/pull/3658

- Add options in Makefile to cmake by @sighingnow in https://github.com/facebook/zstd/pull/3657

- fix a minor inefficiency in compress_superblock by @Cyan4973 in https://github.com/facebook/zstd/pull/3668

- Fixed a bug in the educational decoder by @Cyan4973 in https://github.com/facebook/zstd/pull/3659

- changed LLU suffix into ULL for Visual 2012 and lower by @Cyan4973 in https://github.com/facebook/zstd/pull/3664

- fixed decoder behavior when nbSeqs==0 is encoded using 2 bytes by @Cyan4973 in https://github.com/facebook/zstd/pull/3669

- detect extraneous bytes in the Sequences section by @Cyan4973 in https://github.com/facebook/zstd/pull/3674

- Bitstream produces only zeroes after an overflow event by @Cyan4973 in https://github.com/facebook/zstd/pull/3676

- Update FreeBSD CI images to latest supported releases by @emaste in https://github.com/facebook/zstd/pull/3684

- Clean up a false error message in the LDM debug log by @embg in https://github.com/facebook/zstd/pull/3686

- Hide ASM symbols on Apple platforms by @nidhijaju in https://github.com/facebook/zstd/pull/3688

- Changed the decoding loop to detect more invalid cases of corruption sooner by @Cyan4973 in https://github.com/facebook/zstd/pull/3677

- Fix Intel Xcode builds with assembly by @gjasny in https://github.com/facebook/zstd/pull/3665

- Save one byte on the frame epilogue by @Coder-256 in https://github.com/facebook/zstd/pull/3700

- Update fileio.c: fix build failure with enabled LTO by @LocutusOfBorg in https://github.com/facebook/zstd/pull/3695

- fileio_asyncio: handle malloc fails in AIO_ReadPool_create by @void0red in https://github.com/facebook/zstd/pull/3704

- Fix typographical error in README.md by @nikohoffren in https://github.com/facebook/zstd/pull/3701

- Fixed typo by @alexsifivetw in https://github.com/facebook/zstd/pull/3712

- Improve dual license wording in README by @terrelln in https://github.com/facebook/zstd/pull/3718

- Unpoison Workspace Memory Before Custom-Free by @felixhandte in https://github.com/facebook/zstd/pull/3725

- added ZSTD_decompressDCtx() benchmark option to fullbench by @Cyan4973 in https://github.com/facebook/zstd/pull/3726

- No longer reject dictionaries with literals maxSymbolValue < 255 by @terrelln in https://github.com/facebook/zstd/pull/3731

- fix: ZSTD_BUILD_DECOMPRESSION message by @0o001 in https://github.com/facebook/zstd/pull/3728

- Updated Makefiles for full MSYS2 and Cygwin installation and testing … by @QBos07 in https://github.com/facebook/zstd/pull/3720

- Work around nullptr-with-nonzero-offset warning by @terrelln in https://github.com/facebook/zstd/pull/3738

- Fix & refactor Huffman repeat tables for dictionaries by @terrelln in https://github.com/facebook/zstd/pull/3737

- zdictlib: fix prototype mismatch by @ldv-alt in https://github.com/facebook/zstd/pull/3733

- Fixed zstd cmake shared build on windows by @JohanMabille in https://github.com/facebook/zstd/pull/3739

- Added qnx in the posix test section of platform.h by @klausholstjacobsen in https://github.com/facebook/zstd/pull/3745

- added some documentation on ZSTD_estimate*Size() variants by @Cyan4973 in https://github.com/facebook/zstd/pull/3755

- Improve macro guards for ZSTD_assertValidSequence by @terrelln in https://github.com/facebook/zstd/pull/3770

- Stop suppressing pointer-overflow UBSAN errors by @terrelln in https://github.com/facebook/zstd/pull/3776

- fix x32 tests on Github CI by @Cyan4973 in https://github.com/facebook/zstd/pull/3777

- Fix new typos found by codespell by @DimitriPapadopoulos in https://github.com/facebook/zstd/pull/3771

- Do not test WIN32, instead test _WIN32 by @DimitriPapadopoulos in https://github.com/facebook/zstd/pull/3772

- Fix a very small formatting typo in the lib/README.md file by @dloidolt in https://github.com/facebook/zstd/pull/3763

- Fix pzstd Makefile to allow setting DESTDIR and BINDIR separately by @paulmenzel in https://github.com/facebook/zstd/pull/3752

- Remove FlexArray pattern from ZSTDMT by @Cyan4973 in https://github.com/facebook/zstd/pull/3786

- solving flexArray issue #3785 in fse by @Cyan4973 in https://github.com/facebook/zstd/pull/3789

- Add doc on how to use it with cmake FetchContent by @Saverio976 in https://github.com/facebook/zstd/pull/3795

- Correct FSE probability bit consumption in specification by @elasota in https://github.com/facebook/zstd/pull/3806

- Add Bazel module instructions to README.md by @jondo2010 in https://github.com/facebook/zstd/pull/3812

- Clarify that a stream containing too many Huffman weights is invalid by @elasota in https://github.com/facebook/zstd/pull/3813

- [cmake] Require CMake version 3.5 or newer by @gruenich in https://github.com/facebook/zstd/pull/3807

- Three fixes for the Linux kernel by @terrelln in https://github.com/facebook/zstd/pull/3822

- [huf] Improve fast huffman decoding speed in linux kernel by @terrelln in https://github.com/facebook/zstd/pull/3826

- [huf] Improve fast C & ASM performance on small data by @terrelln in https://github.com/facebook/zstd/pull/3827

- update xxhash library to v0.8.2 by @Cyan4973 in https://github.com/facebook/zstd/pull/3820

- Modernize macros to use do { } while (0) by @terrelln in https://github.com/facebook/zstd/pull/3831

- Clarify that the presence of weight value 1 is required, and a lone implied 1 weight is invalid by @elasota in https://github.com/facebook/zstd/pull/3814

- Move offload API params into ZSTD_CCtx_params by @embg in https://github.com/facebook/zstd/pull/3839

- Update FreeBSD CI: drop 12.4 (nearly EOL) by @emaste in https://github.com/facebook/zstd/pull/3845

- Make offload API compatible with static CCtx by @embg in https://github.com/facebook/zstd/pull/3854

- zlibWrapper: convert to C89 / ANSI C by @emaste in https://github.com/facebook/zstd/pull/3846

- Fix a nullptr dereference in ZSTD_createCDict_advanced2() by @michoecho in https://github.com/facebook/zstd/pull/3847

- Cirrus-CI: Add FreeBSD 14 by @emaste in https://github.com/facebook/zstd/pull/3855

- CI: meson: use builtin handling for MSVC by @eli-schwartz in https://github.com/facebook/zstd/pull/3858

- cli: better errors on argument parsing by @KapJI in https://github.com/facebook/zstd/pull/3850

- Clarify that probability tables must not contain non-zero probabilities for invalid values by @elasota in https://github.com/facebook/zstd/pull/3817

- [x-compile] Fix cross-compiling for AArch64 with lld by @jcelerier in https://github.com/facebook/zstd/pull/3760

- playTests.sh does no longer needs grep -E by @Cyan4973 in https://github.com/facebook/zstd/pull/3865

- minor: playTests.sh more compatible with older versions of grep by @Cyan4973 in https://github.com/facebook/zstd/pull/3877

- disable Intel CET Compatibility tests by @Cyan4973 in https://github.com/facebook/zstd/pull/3884

- improve cmake test by @Cyan4973 in https://github.com/facebook/zstd/pull/3883

- add sparc64 compilation test by @Cyan4973 in https://github.com/facebook/zstd/pull/3886

- add a lorem ipsum generator by @Cyan4973 in https://github.com/facebook/zstd/pull/3890

- Update Dependency in Intel CET Test; Re-Enable Test by @felixhandte in https://github.com/facebook/zstd/pull/3893

- Improve compression of Arrays of Integers (High compression mode) by @Cyan4973 in https://github.com/facebook/zstd/pull/3895

- [Zstd] Less verbose log for patch mode. by @sandreenko in https://github.com/facebook/zstd/pull/3899

- fix 5921623844651008 by @Cyan4973 in https://github.com/facebook/zstd/pull/3900

- Fix fuzz issue 5131069967892480 by @Cyan4973 in https://github.com/facebook/zstd/pull/3902

- Advertise Availability of Security Vulnerability Notifications by @felixhandte in https://github.com/facebook/zstd/pull/3909

- updated setup-msys2 to v2.22.0 by @Cyan4973 in https://github.com/facebook/zstd/pull/3914

- Lorem Ipsum generator update by @Cyan4973 in https://github.com/facebook/zstd/pull/3913

- Reduce scope of variables by @gruenich in https://github.com/facebook/zstd/pull/3903

- Improve speed of ZSTD_c_targetCBlockSize by @Cyan4973 in https://github.com/facebook/zstd/pull/3915

- More regular block sizes with targetCBlockSize by @Cyan4973 in https://github.com/facebook/zstd/pull/3917

- removed sprintf usage from zstdcli.c by @Cyan4973 in https://github.com/facebook/zstd/pull/3916

- Export a zstd::libzstd CMake target if only static or dynamic linkage is specified. by @teo-tsirpanis in https://github.com/facebook/zstd/pull/3811

- fix version of actions/checkout by @Cyan4973 in https://github.com/facebook/zstd/pull/3926

- minor Makefile refactoring by @Cyan4973 in https://github.com/facebook/zstd/pull/3753

- lib/decompress: check for reserved bit corruption in zstd by @aimuz in https://github.com/facebook/zstd/pull/3840

- Fix state table formatting by @elasota in https://github.com/facebook/zstd/pull/3816

- Specify offset 0 as invalid and specify required fixup behavior by @elasota in https://github.com/facebook/zstd/pull/3824

- update -V documentation by @Cyan4973 in https://github.com/facebook/zstd/pull/3928

- fix LLU->ULL by @Cyan4973 in https://github.com/facebook/zstd/pull/3929

- Fix building xxhash on AIX 5.1 by @likema in https://github.com/facebook/zstd/pull/3860

- Fix building on HP-UX 11.11 PA-RISC by @likema in https://github.com/facebook/zstd/pull/3862

- Fix AsyncIO reading seed queueing by @yoniko in https://github.com/facebook/zstd/pull/3940

- Use ZSTD_LEGACY_SUPPORT=5 in "make test" by @embg in https://github.com/facebook/zstd/pull/3943

- Pin sanitizer CI jobs to ubuntu-20.04 by @embg in https://github.com/facebook/zstd/pull/3945

- chore: fix some typos by @acceptacross in https://github.com/facebook/zstd/pull/3949

- new method to deal with offset==0 erroneous edge case by @Cyan4973 in https://github.com/facebook/zstd/pull/3937

- add tests inspired from #2927 by @Cyan4973 in https://github.com/facebook/zstd/pull/3948

- cmake refactor: move HP-UX specific logic into its own function by @Cyan4973 in https://github.com/facebook/zstd/pull/3946

- Fix #3719 : mixing -c, -o and --rm by @Cyan4973 in https://github.com/facebook/zstd/pull/3942

- minor: fix incorrect debug level by @Cyan4973 in https://github.com/facebook/zstd/pull/3936

- add RISC-V emulation tests to Github CI by @Cyan4973 in https://github.com/facebook/zstd/pull/3934

- prevent XXH64 from being autovectorized by XXH512 by default by @Cyan4973 in https://github.com/facebook/zstd/pull/3933

- Stop Hardcoding the POSIX Version on BSDs by @felixhandte in https://github.com/facebook/zstd/pull/3952

- Convert the CircleCI workflow to a GitHub Actions workflow by @jk0 in https://github.com/facebook/zstd/pull/3901

- Add common compressed file types to --exclude-compressed by @daniellerozenblit in https://github.com/facebook/zstd/pull/3951

- Export ZSTD_LEGACY_SUPPORT in tests/Makefile by @embg in https://github.com/facebook/zstd/pull/3955

- Exercise ZSTD_findDecompressedSize() in the simple decompression fuzzer by @embg in https://github.com/facebook/zstd/pull/3959

- Update ZSTD_RowFindBestMatch comment by @yoniko in https://github.com/facebook/zstd/pull/3947

- Add the zeroSeq sample by @Cyan4973 in https://github.com/facebook/zstd/pull/3954

- [cpu] Backport fix for rbx clobbering on Windows with Clang by @terrelln in https://github.com/facebook/zstd/pull/3957

- Do not truncate file name in verbose mode by @Cyan4973 in https://github.com/facebook/zstd/pull/3956

- updated documentation by @Cyan4973 in https://github.com/facebook/zstd/pull/3958

- [asm][aarch64] Mark that BTI and PAC are supported by @terrelln in https://github.com/facebook/zstd/pull/3961

- Use utimensat() on FreeBSD by @felixhandte in https://github.com/facebook/zstd/pull/3960

- reduce the amount of #include in cover.h by @Cyan4973 in https://github.com/facebook/zstd/pull/3962

- Remove Erroneous Exclusion of Hidden Files and Folders in --output-dir-mirror by @felixhandte in https://github.com/facebook/zstd/pull/3963

- Promote ZSTD_c_targetCBlockSize Parameter to Stable API by @felixhandte in https://github.com/facebook/zstd/pull/3964

- [cmake] Always create libzstd target by @terrelln in https://github.com/facebook/zstd/pull/3965

- Remove incorrect docs regarding ZSTD_findFrameCompressedSize() by @embg in https://github.com/facebook/zstd/pull/3967

- add line number to debug traces by @Cyan4973 in https://github.com/facebook/zstd/pull/3966

- bump version number by @Cyan4973 in https://github.com/facebook/zstd/pull/3969

- Export zstd's public headers via BUILD_INTERFACE by @terrelln in https://github.com/facebook/zstd/pull/3968

- Fix bug with streaming decompression of magicless format by @embg in https://github.com/facebook/zstd/pull/3971

- pzstd: use c++14 without conditions by @kanavin in https://github.com/facebook/zstd/pull/3682

- Fix bugs in simple decompression fuzzer by @yoniko in https://github.com/facebook/zstd/pull/3978

- Fuzzing and bugfixes for magicless-format decoding by @embg in https://github.com/facebook/zstd/pull/3976

- Fix & fuzz ZSTD_generateSequences by @terrelln in https://github.com/facebook/zstd/pull/3981

- Fail on errors when building fuzzers by @yoniko in https://github.com/facebook/zstd/pull/3979

- [cmake] Emit warnings for contradictory build settings by @terrelln in https://github.com/facebook/zstd/pull/3975

- Document the process for adding a new fuzzer by @embg in https://github.com/facebook/zstd/pull/3982

- Fix -Werror=pointer-arith in fuzzers by @embg in https://github.com/facebook/zstd/pull/3983

- Doc update by @Cyan4973 in https://github.com/facebook/zstd/pull/3977

- v1.5.6 by @Cyan4973 in https://github.com/facebook/zstd/pull/3984

New Contributors

- @Kim-SSi made their first contribution in https://github.com/facebook/zstd/pull/3600

- @mredig made their first contribution in https://github.com/facebook/zstd/pull/3614

- @dunhor made their first contribution in https://github.com/facebook/zstd/pull/3636

- @sighingnow made their first contribution in https://github.com/facebook/zstd/pull/3657

- @nidhijaju made their first contribution in https://github.com/facebook/zstd/pull/3688

- @gjasny made their first contribution in https://github.com/facebook/zstd/pull/3665

- @Coder-256 made their first contribution in https://github.com/facebook/zstd/pull/3700

- @LocutusOfBorg made their first contribution in https://github.com/facebook/zstd/pull/3695

- @void0red made their first contribution in https://github.com/facebook/zstd/pull/3704

- @nikohoffren made their first contribution in https://github.com/facebook/zstd/pull/3701

- @alexsifivetw made their first contribution in https://github.com/facebook/zstd/pull/3712

- @0o001 made their first contribution in https://github.com/facebook/zstd/pull/3728

- @QBos07 made their first contribution in https://github.com/facebook/zstd/pull/3720

- @JohanMabille made their first contribution in https://github.com/facebook/zstd/pull/3739

- @klausholstjacobsen made their first contribution in https://github.com/facebook/zstd/pull/3745

- @Saverio976 made their first contribution in https://github.com/facebook/zstd/pull/3795

- @elasota made their first contribution in https://github.com/facebook/zstd/pull/3806

- @jondo2010 made their first contribution in https://github.com/facebook/zstd/pull/3812

- @gruenich made their first contribution in https://github.com/facebook/zstd/pull/3807

- @michoecho made their first contribution in https://github.com/facebook/zstd/pull/3847

- @KapJI made their first contribution in https://github.com/facebook/zstd/pull/3850

- @jcelerier made their first contribution in https://github.com/facebook/zstd/pull/3760

- @sandreenko made their first contribution in https://github.com/facebook/zstd/pull/3899

- @teo-tsirpanis made their first contribution in https://github.com/facebook/zstd/pull/3811

- @aimuz made their first contribution in https://github.com/facebook/zstd/pull/3840

- @acceptacross made their first contribution in https://github.com/facebook/zstd/pull/3949

- @jk0 made their first contribution in https://github.com/facebook/zstd/pull/3901

Full Changelog: https://github.com/facebook/zstd/compare/v1.5.5...v1.5.6

Published by Cyan4973 over 1 year ago

Zstandard v1.5.5 Release Note

This is a quick fix release. Its primary focus is to correct a rare corruption bug in high compression mode, detected by @danlark1. The probability of producing such a scenario by random chance is extremely low. It evaded months of continuous fuzzer testing because of the number and complexity of simultaneous conditions required to trigger it. Nevertheless, @danlark1 from Google shepherds such a humongous amount of data that he managed to detect a reproduction case (corruptions are detected thanks to the checksum). That made it possible for @terrelln to investigate and fix the bug. Thanks!

While the probability might be very small, corruption issues are nonetheless very serious, so an update to this version is highly recommended, especially if you employ high compression modes (levels 16+).

When the issue was detected, a number of other improvements and minor fixes were already in progress, so they are also included in this release. Let’s detail the main ones.

Improved memory usage and speed for the --patch-from mode

v1.5.5 introduces memory-mapped dictionaries, by @daniellerozenblit, for both POSIX (#3486) and Windows (#3557).

This feature allows zstd to memory-map large dictionaries rather than loading them entirely into memory. This can make a big difference in memory-constrained environments that create or apply patches for large data sets.

The benefit is most visible under memory pressure, since mmap lets the system release less-used memory and continue working.

But even when memory is plentiful, there are still measurable memory benefits, as shown in the graphs below, especially when the reference turns out to be only partially relevant to the patch.

This feature is automatically enabled for --patch-from compression/decompression when the dictionary is larger than the user-set memory limit. It can also be manually enabled/disabled using --mmap-dict or --no-mmap-dict respectively.

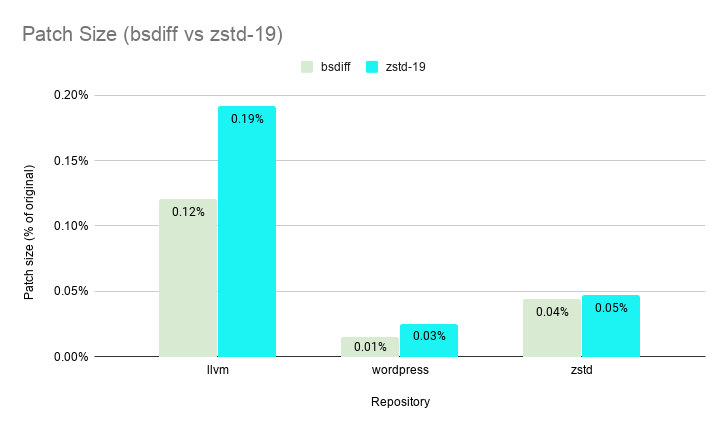

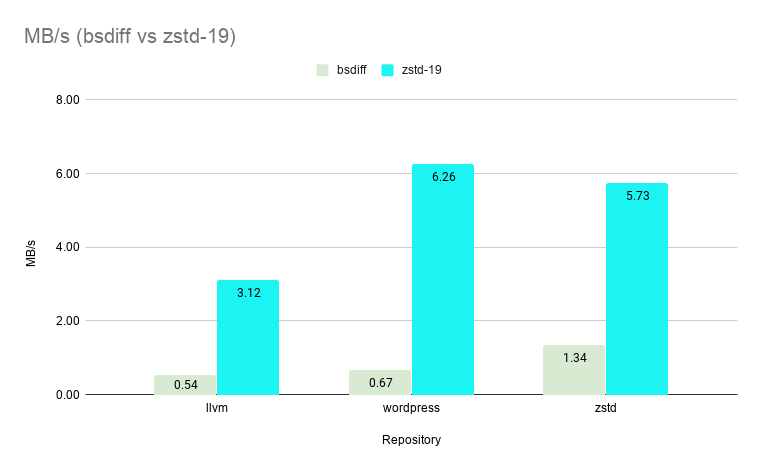

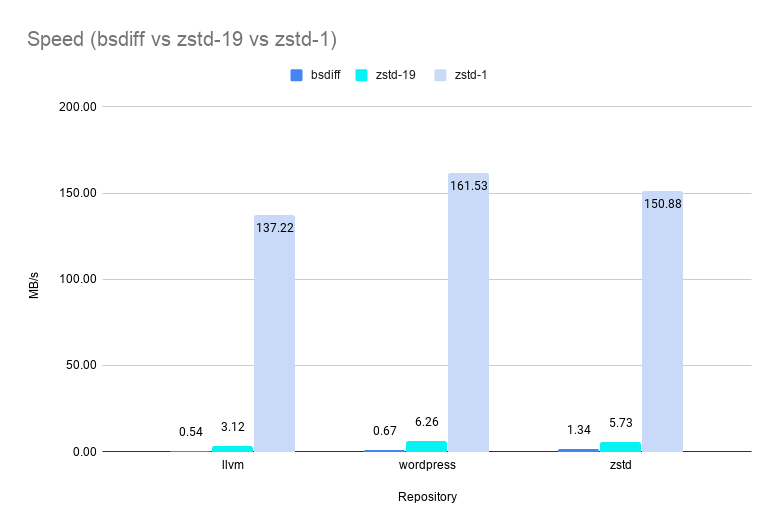

Additionally, @daniellerozenblit introduces significant speed improvements for --patch-from.

An I/O optimization in #3486 greatly improves --patch-from decompression speed on Linux, typically by +50% on large files (~1GB).

Compression speed also benefits from a dictionary-indexing speed optimization introduced in #3545. It greatly accelerates --patch-from compression, typically doubling speed on large files (~1GB), and sometimes more depending on the exact scenario.

This speed improvement comes with a slight regression in compression ratio, and is therefore enabled only for non-ultra compression strategies.

Speed improvements of middle-level compression for specific scenarios

The row-hash match finder introduced in version 1.5.0 for levels 5-12 has been improved in version 1.5.5, enhancing its speed in specific corner-case scenarios.

The first optimization (#3426) accelerates streaming compression using ZSTD_compressStream on small inputs by removing an expensive table initialization step. This results in remarkable speed increases for very small inputs.

The following scenario measures the compression speed of ZSTD_compressStream at level 9 for different sample sizes on a Linux platform running an i7-9700K CPU.

| sample size | v1.5.4 (MB/s) | v1.5.5 (MB/s) | improvement |
|---|---|---|---|
| 100 | 1.4 | 44.8 | x32 |
| 200 | 2.8 | 44.9 | x16 |
| 500 | 6.5 | 60.0 | x9.2 |
| 1K | 12.4 | 70.0 | x5.6 |
| 2K | 25.0 | 111.3 | x4.4 |
| 4K | 44.4 | 139.4 | x3.2 |
| ... | ... | ... | |
| 1M | 97.5 | 99.4 | +2% |

The second optimization (#3552) speeds up compression of incompressible data by a large multiplier. It does this by increasing the step size and reducing the frequency of match searches when no matches are found, with negligible impact on the compression ratio. This makes mid-level compression essentially inexpensive on incompressible data, which is typically already-compressed data (note: this was already the case for fast compression levels).
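The skip-ahead idea can be illustrated with a toy hash-based match finder. This is not zstd's lazy match finder. The constants TOY_HASH_LOG and TOY_SEARCH_STRENGTH, and the rule "advance by 1 + (bytes since last match >> TOY_SEARCH_STRENGTH)", are hypothetical choices made for this sketch. The code only counts probes and matches; it does not emit sequences. No zstd headers are needed.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define TOY_HASH_LOG 12         /* hypothetical: 4096-entry hash table */
#define TOY_SEARCH_STRENGTH 8   /* hypothetical: step grows by 1 per 256 missed bytes */
#define TOY_MIN_MATCH 4

static uint32_t toy_read32(const unsigned char* p)
{
    uint32_t v;
    memcpy(&v, p, sizeof v);  /* alignment-safe load */
    return v;
}

static uint32_t toy_hash(uint32_t v)
{
    return (v * 2654435761u) >> (32 - TOY_HASH_LOG);
}

/* Scans src for 4-byte repeats. Returns the number of positions probed and
 * stores the number of matches in *matchesOut. With accelerate != 0, the
 * distance between probes grows while no match is found. */
static size_t toy_scan(const unsigned char* src, size_t size, int accelerate,
                       size_t* matchesOut)
{
    uint32_t table[1u << TOY_HASH_LOG];  /* stores position + 1; 0 means empty */
    size_t probes = 0, matches = 0;
    size_t ip = 0, anchor = 0;           /* anchor: end of the most recent match */
    memset(table, 0, sizeof table);

    while (ip + TOY_MIN_MATCH <= size) {
        uint32_t const seq = toy_read32(src + ip);
        uint32_t const h = toy_hash(seq);
        size_t const cand = table[h];
        table[h] = (uint32_t)(ip + 1);
        probes++;

        if (cand != 0 && toy_read32(src + cand - 1) == seq) {
            /* Extend the match forward, then resume right after it. */
            size_t len = TOY_MIN_MATCH;
            while (ip + len < size && src[cand - 1 + len] == src[ip + len]) len++;
            ip += len;
            anchor = ip;
            matches++;
            continue;
        }
        /* No match: advance by 1, or by more the longer no match has been found. */
        ip += accelerate ? ((ip - anchor) >> TOY_SEARCH_STRENGTH) + 1 : 1;
    }
    *matchesOut = matches;
    return probes;
}
```

On random input, the accelerated scan probes only a small fraction of positions. On repetitive text, a match is found before the step has time to grow, so little compression opportunity is lost. This is the kind of trade-off described above.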

The following scenario measures the compression speed of ZSTD_compress, compiled with gcc-9, for a ~10MB incompressible sample on a Linux platform running an i7-9700K CPU.

| level | v1.5.4 (MB/s) | v1.5.5 (MB/s) | improvement |
|---|---|---|---|
| 3 | 3500 | 3500 | not a row-hash level (control) |
| 5 | 400 | 2500 | x6.2 |
| 7 | 380 | 2200 | x5.8 |
| 9 | 176 | 1880 | x10 |
| 11 | 67 | 1130 | x16 |
| 13 | 89 | 89 | not a row-hash level (control) |

Miscellaneous

There are other welcome speed improvements in this package.

For example, @felixhandte increased the processing speed of small files by carefully reducing the number of system calls (#3479). This can easily translate into +10% speed when processing many small files in batch.

The Seekable format also received some care. It is now much faster when splitting data into very small blocks (#3544). In an extreme scenario reported by @P-E-Meunier, processing speed improves by x90. Even for more "common" settings, such as using 4KB blocks on "normally" compressible data like enwik, it still provides a healthy x2 processing speed benefit. Moreover, @dloidolt merged an optimization that reduces the number of I/O seek() events during reads (decompression), which also benefits speed.

The release is not limited to speed improvements: several loose ends and corner cases were also fixed. For a more detailed list of changes, please take a look at the changelog.

Change Log

- fix: fix rare corruption bug affecting the high compression mode, reported by @danlark1 (#3517, @terrelln)

- perf: improve mid-level compression speed (#3529, #3533, #3543, @yoniko and #3552, @terrelln)

- lib: deprecated bufferless block-level API (#3534) by @terrelln

- cli: mmap large dictionaries to save memory, by @daniellerozenblit

- cli: improve speed of --patch-from mode (~+50%) (#3545) by @daniellerozenblit

- cli: improve i/o speed (~+10%) when processing lots of small files (#3479) by @felixhandte

- cli: zstd no longer crashes when requested to write into a write-protected directory (#3541) by @felixhandte

- cli: fix decompression into block device using -o (#3584, @Cyan4973) reported by @georgmu

- build: fix zstd CLI compiled with lzma support but not zlib support (#3494) by @Hello71

- build: fix: cmake no longer requires 3.18 as minimum version (#3510) by @kou

- build: fix MSVC+ClangCL linking issue (#3569) by @tru

- build: fix zstd-dll, version of zstd CLI that links to the dynamic library (#3496) by @yoniko

- build: fix MSVC warnings (#3495) by @embg

- doc: updated zstd specification to clarify corner cases, by @Cyan4973

- doc: document how to create fat binaries for macos (#3568) by @rickmark

- misc: improve seekable format ingestion speed (~+100%) for very small chunk sizes (#3544) by @Cyan4973

- misc: tests/fullbench can benchmark multiple files (#3516) by @dloidolt

Full change list (auto-generated)

- Fix all MSVC warnings by @embg in https://github.com/facebook/zstd/pull/3495

- Fix zstd-dll build missing dependencies by @yoniko in https://github.com/facebook/zstd/pull/3496

- Bump github/codeql-action from 2.2.1 to 2.2.4 by @dependabot in https://github.com/facebook/zstd/pull/3503

- Github Action to generate Win64 artifacts by @Cyan4973 in https://github.com/facebook/zstd/pull/3491

- Use correct types in LZMA comp/decomp by @Hello71 in https://github.com/facebook/zstd/pull/3497

- Make Github workflows permissions read-only by default by @yoniko in https://github.com/facebook/zstd/pull/3488

- CI Workflow for external compressors dependencies by @yoniko in https://github.com/facebook/zstd/pull/3505

- Fix cli-tests issues by @daniellerozenblit in https://github.com/facebook/zstd/pull/3509

- Fix Permissions on Publish Release Artifacts Job by @felixhandte in https://github.com/facebook/zstd/pull/3511

- Use f-variants of chmod() and chown() by @felixhandte in https://github.com/facebook/zstd/pull/3479

- Don't require CMake 3.18 or later by @kou in https://github.com/facebook/zstd/pull/3510

- meson: always build the zstd binary when tests are enabled by @eli-schwartz in https://github.com/facebook/zstd/pull/3490

- [bug-fix] Fix rare corruption bug affecting the block splitter by @terrelln in https://github.com/facebook/zstd/pull/3517

- Clarify zstd specification for Huffman blocks by @Cyan4973 in https://github.com/facebook/zstd/pull/3514

- Fix typos found by codespell by @DimitriPapadopoulos in https://github.com/facebook/zstd/pull/3513

- Bump github/codeql-action from 2.2.4 to 2.2.5 by @dependabot in https://github.com/facebook/zstd/pull/3518

- fullbench with two files by @dloidolt in https://github.com/facebook/zstd/pull/3516

- Add initialization of clevel to static cdict (#3525) by @yoniko in https://github.com/facebook/zstd/pull/3527

- [linux-kernel] Fix assert definition by @terrelln in https://github.com/facebook/zstd/pull/3532

- Add ZSTD_set{C,F,}Params() helper functions by @terrelln in https://github.com/facebook/zstd/pull/3530

- Clarify dstCapacity requirements by @terrelln in https://github.com/facebook/zstd/pull/3531

- Mmap large dictionaries in patch-from mode by @daniellerozenblit in https://github.com/facebook/zstd/pull/3486

- added clarifications for sizes of compressed huffman blocks and streams. by @Cyan4973 in https://github.com/facebook/zstd/pull/3538

- Simplify benchmark unit invocation API from CLI by @Cyan4973 in https://github.com/facebook/zstd/pull/3526

- Avoid Segfault Caused by Calling setvbuf() on Null File Pointer by @felixhandte in https://github.com/facebook/zstd/pull/3541

- Pin Moar Action Dependencies by @felixhandte in https://github.com/facebook/zstd/pull/3542

- Improved seekable format ingestion speed for small frame size by @Cyan4973 in https://github.com/facebook/zstd/pull/3544

- Reduce RowHash's tag space size by x2 by @yoniko in https://github.com/facebook/zstd/pull/3543

- [Bugfix] row hash tries to match position 0 by @yoniko in https://github.com/facebook/zstd/pull/3548

- Bump github/codeql-action from 2.2.5 to 2.2.6 by @dependabot in https://github.com/facebook/zstd/pull/3549

- Add init once memory (#3528) by @yoniko in https://github.com/facebook/zstd/pull/3529

- Introduce salt into row hash (#3528 part 2) by @yoniko in https://github.com/facebook/zstd/pull/3533

- added documentation for the seekable format by @Cyan4973 in https://github.com/facebook/zstd/pull/3547

- patch-from speed optimization by @daniellerozenblit in https://github.com/facebook/zstd/pull/3545

- Deprecated bufferless and block level APIs by @terrelln in https://github.com/facebook/zstd/pull/3534

- added documentation for LDM + dictionary compatibility by @Cyan4973 in https://github.com/facebook/zstd/pull/3553

- Fix a bug in the CLI tests newline processing, then simplify it further by @ppentchev in https://github.com/facebook/zstd/pull/3559

- [lazy] Skip over incompressible data by @terrelln in https://github.com/facebook/zstd/pull/3552

- Fix patch-from speed optimization by @daniellerozenblit in https://github.com/facebook/zstd/pull/3556

- Bump actions/checkout from 3.3.0 to 3.5.0 by @dependabot in https://github.com/facebook/zstd/pull/3572

- [easy] minor doc update for --rsyncable by @Cyan4973 in https://github.com/facebook/zstd/pull/3570

- [contrib/pzstd] Select `-std=c++11` When Default is Older by @felixhandte in https://github.com/facebook/zstd/pull/3574

- Add instructions for building Universal2 on macOS via CMake by @rickmark in https://github.com/facebook/zstd/pull/3568

- Provide an interface for fuzzing sequence producer plugins by @embg in https://github.com/facebook/zstd/pull/3551

- mmap for windows by @daniellerozenblit in https://github.com/facebook/zstd/pull/3557

- Bump github/codeql-action from 2.2.6 to 2.2.8 by @dependabot in https://github.com/facebook/zstd/pull/3573

- Disable linker flag detection on MSVC/ClangCL. by @tru in https://github.com/facebook/zstd/pull/3569

- Couple tweaks to improve decompression speed with clang PGO compilation by @zhuhan0 in https://github.com/facebook/zstd/pull/3576

- Increase tests timeout by @dvoropaev in https://github.com/facebook/zstd/pull/3540

- added a Clang-CL Windows test to CI by @Cyan4973 in https://github.com/facebook/zstd/pull/3579

- Seekable format read optimization by @Cyan4973 in https://github.com/facebook/zstd/pull/3581

- Check that `dest` is valid for decompression by @daniellerozenblit in https://github.com/facebook/zstd/pull/3555

- fix decompression with -o writing into a block device by @Cyan4973 in https://github.com/facebook/zstd/pull/3584

- updated version number to v1.5.5 by @Cyan4973 in https://github.com/facebook/zstd/pull/3577

New Contributors

- @kou made their first contribution in https://github.com/facebook/zstd/pull/3510

- @dloidolt made their first contribution in https://github.com/facebook/zstd/pull/3516

- @ppentchev made their first contribution in https://github.com/facebook/zstd/pull/3559

- @rickmark made their first contribution in https://github.com/facebook/zstd/pull/3568

- @dvoropaev made their first contribution in https://github.com/facebook/zstd/pull/3540

Full Changelog: https://github.com/facebook/zstd/compare/v1.5.4...v1.5.5

Published by Cyan4973 over 1 year ago

Zstandard v1.5.4 is a pretty big release benefiting from one year of work, spread over more than 650 commits. It offers significant performance improvements across multiple scenarios, as well as new features (detailed below). There is also a crop of small bug fixes; a few of them, targeting 32-bit mode, are important enough to make this release a recommended upgrade.

Various Speed improvements

This release accumulates a number of scenario-specific improvements that, together, benefit a good portion of the installed base in one way or another.

Among the easier ones to describe, the repository has received several contributions of ARM optimizations, notably from @JunHe77 and @danlark1, while @terrelln has improved decompression speed for non-x64 systems, including ARM. The combined effect is visible in the following examples, using an M1 Pro and a Galaxy S22 (both aarch64):

| cpu | function | corpus | v1.5.2 | v1.5.4 | Improvement |
|---|---|---|---|---|---|
| M1 Pro | decompress | silesia.tar | 1370 MB/s | 1480 MB/s | +8% |
| Galaxy S22 | decompress | silesia.tar | 1150 MB/s | 1200 MB/s | +4% |

Middle compression levels (5-12) receive some care too, with @terrelln improving the dispatch engine and @danlark1 contributing NEON optimizations. Exact speedups vary with platform, CPU, compiler, and compression level, though one can expect gains ranging from +1% to +10% depending on the scenario.

| cpu | function | corpus | v1.5.2 | v1.5.4 | Improvement |
|---|---|---|---|---|---|
| i7-9700k | compress -6 | silesia.tar | 110 MB/s | 121 MB/s | +10% |
| Galaxy S22 | compress -6 | silesia.tar | 98 MB/s | 103 MB/s | +5% |
| M1 Pro | compress -6 | silesia.tar | 122 MB/s | 130 MB/s | +6.5% |
| i7-9700k | compress -9 | silesia.tar | 64 MB/s | 70 MB/s | +9.5% |
| Galaxy S22 | compress -9 | silesia.tar | 51 MB/s | 52 MB/s | +1% |
| M1 Pro | compress -9 | silesia.tar | 77 MB/s | 86 MB/s | +11.5% |
| i7-9700k | compress -12 | silesia.tar | 31.6 MB/s | 31.8 MB/s | +0.5% |
| Galaxy S22 | compress -12 | silesia.tar | 20.9 MB/s | 22.1 MB/s | +5% |
| M1 Pro | compress -12 | silesia.tar | 36.1 MB/s | 39.7 MB/s | +10% |

Speed of the streaming compression interface has been improved by @embg in scenarios involving large files (where the size is a multiple of the windowSize parameter). The improvement is mostly perceptible at high speeds (i.e. around level 1). In the following sample, the measurement is taken directly at the ZSTD_compressStream() function call, using the dedicated benchmark tool tests/fullbench.

| cpu | function | corpus | v1.5.2 | v1.5.4 | Improvement |
|---|---|---|---|---|---|
| i7-9700k | ZSTD_compressStream() -1 | silesia.tar | 392 MB/s | 429 MB/s | +9.5% |
| Galaxy S22 | ZSTD_compressStream() -1 | silesia.tar | 380 MB/s | 430 MB/s | +13% |
| M1 Pro | ZSTD_compressStream() -1 | silesia.tar | 476 MB/s | 539 MB/s | +13% |

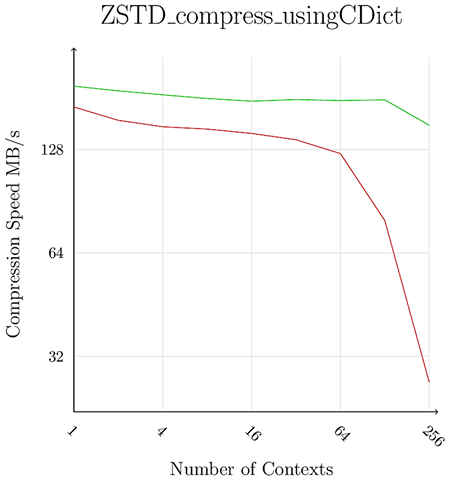

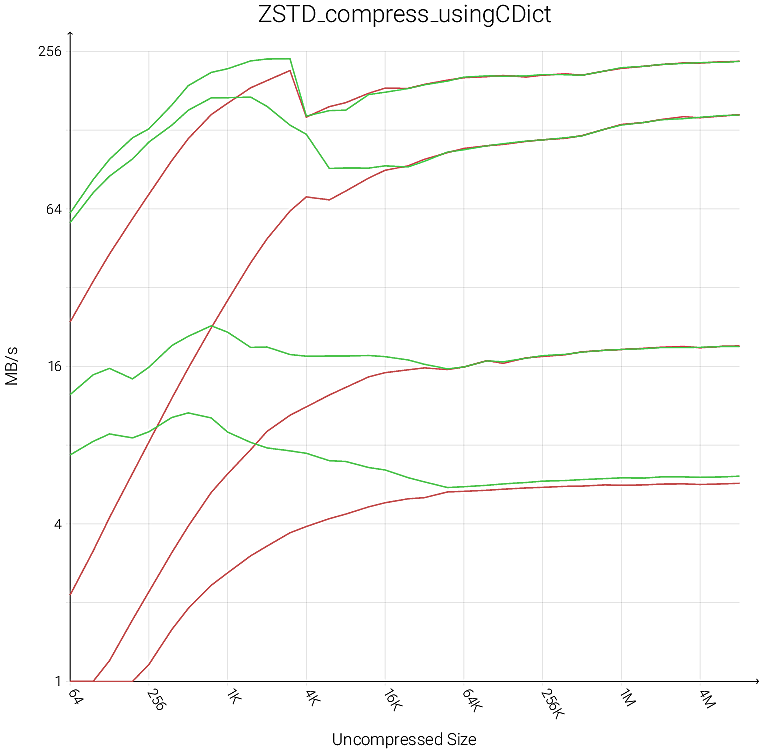

Finally, dictionary compression speed has received a good boost by @embg. Exact outcome varies depending on system and corpus. The following result is achieved by cutting the enwik8 compression corpus into 1KB blocks, generating a dictionary from these blocks, and then benchmarking the compression speed at level 1.

| cpu | function | corpus | v1.5.2 | v1.5.4 | Improvement |
|---|---|---|---|---|---|
| i7-9700k | dictionary compress | enwik8 -B1K | 125 MB/s | 165 MB/s | +32% |
| Galaxy S22 | dictionary compress | enwik8 -B1K | 138 MB/s | 166 MB/s | +20% |
| M1 Pro | dictionary compress | enwik8 -B1K | 155 MB/s | 195 MB/s | +25% |

There are a few more scenario-specific improvements listed in the changelog section below.

I/O Performance improvements

The 1.5.4 release improves the I/O performance of the zstd CLI by using system buffers (macOS) and by adding a new asynchronous I/O capability, enabled by default on large files (when threading is available). The user can also control this capability explicitly with the --[no-]asyncio flag. These new I/O threads remove the need to block on I/O operations. The impact is mostly noticeable when decompressing large files (a few MB or more), though the exact outcome depends on environment and run conditions.

Decompression gains the most, due to its single-threaded serial nature and the high speeds involved. In some cases we observe roughly double the performance (local Mac machines), and a +15-45% benefit across Intel Linux servers (see table for details).

On the compression side of things, we’ve measured up to 5% improvements. The impact is lower because compression is already partially asynchronous via the internal MT mode (see release v1.3.4).

The following table shows decompression throughput for silesia and enwik8 on several platforms, including some Skylake-era Linux servers and an M1 MacBook Pro. It compares v1.5.2 with v1.5.4, with asyncio both off and on.

| platform | corpus | v1.5.2 | v1.5.4-no-asyncio | v1.5.4 | Improvement |
|---|---|---|---|---|---|
| Xeon D-2191A CentOS8 | enwik8 | 280 MB/s | 280 MB/s | 324 MB/s | +16% |
| Xeon D-2191A CentOS8 | silesia.tar | 303 MB/s | 302 MB/s | 386 MB/s | +27% |
| i7-1165g7 win10 | enwik8 | 270 MB/s | 280 MB/s | 350 MB/s | +27% |
| i7-1165g7 win10 | silesia.tar | 450 MB/s | 440 MB/s | 580 MB/s | +28% |
| i7-9700K Ubuntu20 | enwik8 | 600 MB/s | 604 MB/s | 829 MB/s | +38% |
| i7-9700K Ubuntu20 | silesia.tar | 683 MB/s | 678 MB/s | 991 MB/s | +45% |
| Galaxy S22 | enwik8 | 360 MB/s | 420 MB/s | 515 MB/s | +70% |
| Galaxy S22 | silesia.tar | 310 MB/s | 320 MB/s | 580 MB/s | +85% |
| MBP M1 | enwik8 | 428 MB/s | 734 MB/s | 815 MB/s | +90% |
| MBP M1 | silesia.tar | 465 MB/s | 875 MB/s | 1001 MB/s | +115% |

Support for externally-defined sequence producers

libzstd can now support external sequence producers via a new advanced registration function ZSTD_registerSequenceProducer() (#3333).

This API allows users to provide their own custom sequence producer which libzstd invokes to process each block. The produced list of sequences (literals and matches) is then post-processed by libzstd to produce valid compressed blocks.

This block-level offload API is a more granular complement to the existing frame-level offload API ZSTD_compressSequences() (introduced in v1.5.1). It offers an easier migration story for applications already integrated with libzstd: the user application continues to invoke the same compression functions, ZSTD_compress2() or ZSTD_compressStream2(), as usual, and transparently benefits from the specific properties of the external sequence producer. For example, the sequence producer could be tuned to take advantage of known characteristics of the input, to offer better speed / ratio.

One scenario that becomes possible is to combine this capability with hardware-accelerated matchfinders, such as the Intel® QuickAssist accelerator (Intel® QAT) provided in server CPUs such as the 4th Gen Intel® Xeon® Scalable processors (previously codenamed Sapphire Rapids). More details to be provided in future communications.

Change Log

perf: +20% faster Huffman decompression for targets that can't compile x64 assembly (#3449, @terrelln)

perf: up to +10% faster streaming compression at levels 1-2 (#3114, @embg)

perf: +4-13% for levels 5-12 by optimizing function generation (#3295, @terrelln)

perf: +3-11% compression speed for ARM targets (#3199, #3164, #3145, #3141, #3138, @JunHe77 and #3139, #3160, @danlark1)

perf: +5-30% faster dictionary compression at levels 1-4 (#3086, #3114, #3152, @embg)

perf: +10-20% cold dict compression speed by prefetching CDict tables (#3177, @embg)

perf: +1% faster compression by removing a branch in ZSTD_fast_noDict (#3129, @felixhandte)

perf: Small compression ratio improvements in high compression mode (#2983, #3391, @Cyan4973 and #3285, #3302, @daniellerozenblit)

perf: small speed improvement by better detecting STATIC_BMI2 for clang (#3080, @TocarIP)

perf: Improved streaming performance when ZSTD_c_stableInBuffer is set (#2974, @Cyan4973)

cli: Asynchronous I/O for improved cli speed (#2975, #2985, #3021, #3022, @yoniko)

cli: Change zstdless behavior to align with zless (#2909, @binhdvo)

cli: Keep original file if -c or --stdout is given (#3052, @dirkmueller)

cli: Keep original files when result is concatenated into a single output with -o (#3450, @Cyan4973)

cli: Preserve Permissions and Ownership of regular files (#3432, @felixhandte)

cli: Print zlib/lz4/lzma library versions with -vv (#3030, @terrelln)

cli: Print checksum value for single frame files with -lv (#3332, @Cyan4973)

cli: Print dictID when present with -lv (#3184, @htnhan)

cli: when stderr is not the console, disable status updates, but preserve final summary (#3458, @Cyan4973)

cli: support --best and --no-name in gzip compatibility mode (#3059, @dirkmueller)

cli: support for posix high resolution timer clock_gettime(), for improved benchmark accuracy (#3423, @Cyan4973)

cli: improved help/usage (-h, -H) formatting (#3094, @dirkmueller and #3385, @jonpalmisc)

cli: Better handling of bogus numeric values (#3268, @ctkhanhly)

cli: Fix input consisting of multiple files and stdin (#3222, @yoniko)

cli: Fix tiny files passthrough (#3215, @cgbur)

cli: Fix for -r on empty directory (#3027, @brailovich)

cli: Fix empty string as argument for --output-dir-* (#3220, @embg)

cli: Fix decompression memory usage reported by -vv --long (#3042, @u1f35c, and #3226, @zengyijing)

cli: Fix infinite loop when empty input is passed to trainer (#3081, @terrelln)

cli: Fix --adapt not working when --no-progress is also set (#3354, @terrelln)

api: Support for External Sequence Producer (#3333, @embg)

api: Support for in-place decompression (#3421, @terrelln)

api: New ZSTD_CCtx_setCParams() function, set all parameters defined in a ZSTD_compressionParameters structure (#3403, @Cyan4973)

api: Streaming decompression detects incorrect header ID sooner (#3175, @Cyan4973)

api: Window size resizing optimization for edge case (#3345, @daniellerozenblit)

api: More accurate error codes for busy-loop scenarios (#3413, #3455, @Cyan4973)

api: Fix limit overflow in compressBound and decompressBound (#3362, #3373, @Cyan4973) reported by @nigeltao

api: Deprecate several advanced experimental functions: streaming (#3408, @embg), copy (#3196, @mileshu)

bug: Fix corruption that rarely occurs in 32-bit mode with wlog=25 (#3361, @terrelln)

bug: Fix for block-splitter (#3033, @Cyan4973)

bug: Fixes for Sequence Compression API (#3023, #3040, @Cyan4973)

bug: Fix leaking thread handles on Windows (#3147, @animalize)

bug: Fix timing issues with cmake/meson builds (#3166, #3167, #3170, @Cyan4973)

build: Allow user to select legacy level for cmake (#3050, @shadchin)

build: Enable legacy support by default in cmake (#3079, @niamster)

build: Meson build script improvements (#3039, #3120, #3122, #3327, #3357, @eli-schwartz and #3276, @neheb)

build: Add aarch64 to supported architectures for zstd_trace (#3054, @ooosssososos)

build: support AIX architecture (#3219, @qiongsiwu)

build: Fix ZSTD_LIB_MINIFY build macro, which now reduces static library size by half (#3366, @terrelln)

build: Fix Windows issues with Multithreading translation layer (#3364, #3380, @yoniko) and ARM64 target (#3320, @cwoffenden)

build: Fix cmake script (#3382, #3392, @terrelln and #3252, @Tachi107 and #3167, @Cyan4973)

doc: Updated man page, providing more details for --train mode (#3112, @Cyan4973)

doc: Add decompressor errata document (#3092, @terrelln)

misc: Enable Intel CET (#2992, #2994, @hjl-tools)

misc: Fix contrib/ seekable format (#3058, @yhoogstrate and #3346, @daniellerozenblit)

misc: Improve speed of the one-file library generator (#3241, @wahern and #3005, @cwoffenden)

PR list (generated by GitHub)

- x86-64: Enable Intel CET by @hjl-tools in https://github.com/facebook/zstd/pull/2992

- Add GitHub Action Checking that Zstd Runs Successfully Under CET by @felixhandte in https://github.com/facebook/zstd/pull/3015

- [opt] minor compression ratio improvement by @Cyan4973 in https://github.com/facebook/zstd/pull/2983

- Simplify HUF_decompress4X2_usingDTable_internal_bmi2_asm_loop by @WojciechMula in https://github.com/facebook/zstd/pull/3013

- Async write for decompression by @yoniko in https://github.com/facebook/zstd/pull/2975

- ZSTD CLI: Use buffered output by @yoniko in https://github.com/facebook/zstd/pull/2985

- Use faster Python script to amalgamate by @cwoffenden in https://github.com/facebook/zstd/pull/3005

- Change zstdless behavior to align with zless by @binhdvo in https://github.com/facebook/zstd/pull/2909

- AsyncIO compression part 1 - refactor of existing asyncio code by @yoniko in https://github.com/facebook/zstd/pull/3021

- Converge sumtype (offset | repcode) numeric representation towards offBase by @Cyan4973 in https://github.com/facebook/zstd/pull/2965

- fix sequence compression API in Explicit Delimiter mode by @Cyan4973 in https://github.com/facebook/zstd/pull/3023

- Lazy parameters adaptation (part 1 - ZSTD_c_stableInBuffer) by @Cyan4973 in https://github.com/facebook/zstd/pull/2974

- Print zlib/lz4/lzma library versions in verbose version output by @terrelln in https://github.com/facebook/zstd/pull/3030

- fix for -r on empty directory by @brailovich in https://github.com/facebook/zstd/pull/3027

- Add new CLI testing platform by @terrelln in https://github.com/facebook/zstd/pull/3020

- AsyncIO compression part 2 - added async read and asyncio to compression code by @yoniko in https://github.com/facebook/zstd/pull/3022

- Macos playtest envvars fix by @yoniko in https://github.com/facebook/zstd/pull/3035

- Fix required decompression memory usage reported by -vv + --long by @u1f35c in https://github.com/facebook/zstd/pull/3042

- Select legacy level for cmake by @shadchin in https://github.com/facebook/zstd/pull/3050

- [trace] Add aarch64 to supported architectures for zstd_trace by @ooosssososos in https://github.com/facebook/zstd/pull/3054

- New features for largeNbDicts benchmark by @embg in https://github.com/facebook/zstd/pull/3063

- Use helper function for bit manipulations. by @TocarIP in https://github.com/facebook/zstd/pull/3075

- [programs] Fix infinite loop when empty input is passed to trainer by @terrelln in https://github.com/facebook/zstd/pull/3081

- Enable STATIC_BMI2 for gcc/clang by @TocarIP in https://github.com/facebook/zstd/pull/3080

- build:cmake: enable ZSTD legacy support by default by @niamster in https://github.com/facebook/zstd/pull/3079

- Implement more gzip compatibility (#3037) by @dirkmueller in https://github.com/facebook/zstd/pull/3059

- [doc] Add decompressor errata document by @terrelln in https://github.com/facebook/zstd/pull/3092

- Handle newer less versions in zstdless testing by @dirkmueller in https://github.com/facebook/zstd/pull/3093

- [contrib][linux] Fix a warning in zstd_reset_cstream() by @cyberknight777 in https://github.com/facebook/zstd/pull/3088

- Software pipeline for ZSTD_compressBlock_fast_dictMatchState (+5-6% compression speed) by @embg in https://github.com/facebook/zstd/pull/3086

- Keep original file if -c or --stdout is given by @dirkmueller in https://github.com/facebook/zstd/pull/3052

- Split help in long and short version, cleanup formatting by @dirkmueller in https://github.com/facebook/zstd/pull/3094

- updated man page, providing more details for --train mode by @Cyan4973 in https://github.com/facebook/zstd/pull/3112

- Meson fixups for Windows by @eli-schwartz in https://github.com/facebook/zstd/pull/3039

- meson: for internal linkage, link to both libzstd and a static copy of it by @eli-schwartz in https://github.com/facebook/zstd/pull/3122

- Software pipeline for ZSTD_compressBlock_fast_extDict (+4-9% compression speed) by @embg in https://github.com/facebook/zstd/pull/3114

- ZSTD_fast_noDict: Avoid Safety Check When Writing `ip1` into Table by @felixhandte in https://github.com/facebook/zstd/pull/3129

- Correct and clarify repcode offset history logic by @embg in https://github.com/facebook/zstd/pull/3127

- [lazy] Optimize ZSTD_row_getMatchMask for levels 8-10 for ARM by @danlark1 in https://github.com/facebook/zstd/pull/3139

- fix leaking thread handles on Windows by @animalize in https://github.com/facebook/zstd/pull/3147

- Remove expensive assert in --rsyncable hot loop by @terrelln in https://github.com/facebook/zstd/pull/3154

- Bugfix for huge dictionaries by @embg in https://github.com/facebook/zstd/pull/3157

- common: apply two stage copy to aarch64 by @JunHe77 in https://github.com/facebook/zstd/pull/3145

- dec: adjust seqSymbol load on aarch64 by @JunHe77 in https://github.com/facebook/zstd/pull/3141

- Fix big endian ARM NEON path by @danlark1 in https://github.com/facebook/zstd/pull/3160

- [contrib] largeNbDicts bugfix + improvements by @embg in https://github.com/facebook/zstd/pull/3161

- display a warning message when using C90 clock_t by @Cyan4973 in https://github.com/facebook/zstd/pull/3166

- remove explicit standard setting from cmake script by @Cyan4973 in https://github.com/facebook/zstd/pull/3167

- removed gnu99 statement from meson recipe by @Cyan4973 in https://github.com/facebook/zstd/pull/3170

- "Short cache" optimization for level 1-4 DMS (+5-30% compression speed) by @embg in https://github.com/facebook/zstd/pull/3152

- Streaming decompression can detect incorrect header ID sooner by @Cyan4973 in https://github.com/facebook/zstd/pull/3175

- Add prefetchCDictTables CCtxParam (+10-20% cold dict compression speed) by @embg in https://github.com/facebook/zstd/pull/3177

- Fix ZSTD_BUILD_TESTS=ON with MSVC by @nocnokneo in https://github.com/facebook/zstd/pull/3180

- zstd -lv to show dictID by @htnhan in https://github.com/facebook/zstd/pull/3184

- Initial commit to address 3090. Added support to decompress empty block. by @udayanbapat in https://github.com/facebook/zstd/pull/3118

- [largeNbDicts] Second try at fixing decompression segfault to always create compressInstructions by @zhuhan0 in https://github.com/facebook/zstd/pull/3209

- Clarify benchmark chunking docstring by @embg in https://github.com/facebook/zstd/pull/3197

- decomp: add prefetch for matched seq on aarch64 by @JunHe77 in https://github.com/facebook/zstd/pull/3164

- lib: add hint to generate more pipeline friendly code by @JunHe77 in https://github.com/facebook/zstd/pull/3138

- [AIX] Fix Compiler Flags and Bugs on AIX to Pass All Tests by @qiongsiwu in https://github.com/facebook/zstd/pull/3219

- zlibWrapper: Update for zlib 1.2.12 by @orbea in https://github.com/facebook/zstd/pull/3217

- Fix small file passthrough by @cgbur in https://github.com/facebook/zstd/pull/3215

- Add warning when multi-thread decompression is requested by @tomcwang in https://github.com/facebook/zstd/pull/3208

- stdin + multiple file fixes by @yoniko in https://github.com/facebook/zstd/pull/3222

- [AIX] Fixing hash4Ptr for Big Endian Systems by @qiongsiwu in https://github.com/facebook/zstd/pull/3227

- Disallow empty string as argument for --output-dir-flat and --output-dir-mirror by @embg in https://github.com/facebook/zstd/pull/3220

- Deprecate ZSTD_getDecompressedSize() by @terrelln in https://github.com/facebook/zstd/pull/3225

- [T124890272] Mark 2 Obsolete Functions(ZSTD_copy*Ctx) Deprecated in Zstd by @mileshu in https://github.com/facebook/zstd/pull/3196

- fileio_types.h : avoid dependency on mem.h by @Cyan4973 in https://github.com/facebook/zstd/pull/3232

- fixed: verbose output prints wrong value for `wlog` when doing `--long` by @zengyijing in https://github.com/facebook/zstd/pull/3226

- Add explicit --pass-through flag and default to enabled for *cat by @terrelln in https://github.com/facebook/zstd/pull/3223

- Document pass-through behavior by @cgbur in https://github.com/facebook/zstd/pull/3242

- restore combine.sh bash performance while still sticking to POSIX by @wahern in https://github.com/facebook/zstd/pull/3241

- Benchmark program for sequence compression API by @embg in https://github.com/facebook/zstd/pull/3257

- streamline `make clean` list maintenance by adding a `CLEAN` variable by @Cyan4973 in https://github.com/facebook/zstd/pull/3256

- drop `-E` flag in `sed` by @haampie in https://github.com/facebook/zstd/pull/3245

- compress: check more bytes to reduce `ZSTD_count` call by @JunHe77 in https://github.com/facebook/zstd/pull/3199

- build(cmake): improve pkg-config generation by @Tachi107 in https://github.com/facebook/zstd/pull/3252

- Fix for `zstd` CLI accepts bogus values for numeric parameters by @ctkhanhly in https://github.com/facebook/zstd/pull/3268

- ci: test pkg-config file by @Tachi107 in https://github.com/facebook/zstd/pull/3267

- Move ZSTD_DEPRECATED before ZSTDLIB_API/ZSTDLIB_STATIC_API for `clang` by @MaskRay in https://github.com/facebook/zstd/pull/3273

- Enable OpenSSF Scorecard Action by @felixhandte in https://github.com/facebook/zstd/pull/3277

- fixed zstd-pgo target for GCC by @ilyakurdyukov in https://github.com/facebook/zstd/pull/3281

- Cleaner threadPool initialization by @Cyan4973 in https://github.com/facebook/zstd/pull/3288

- Make fuzzing work without ZSTD_MULTITHREAD by @danlark1 in https://github.com/facebook/zstd/pull/3291

- Optimal huf depth by @daniellerozenblit in https://github.com/facebook/zstd/pull/3285

- Make ZSTD_getDictID_fromDDict() Read DictID from DDict by @felixhandte in https://github.com/facebook/zstd/pull/3290

- [contrib][linux-kernel] Generate SPDX license identifiers by @ojeda in https://github.com/facebook/zstd/pull/3294

- [lazy] Use switch instead of indirect function calls, improving compression speed by @terrelln in https://github.com/facebook/zstd/pull/3295

- [linux] Add zstd_common module by @terrelln in https://github.com/facebook/zstd/pull/3292

- Complete migration of ZSTD_c_enableLongDistanceMatching to ZSTD_paramSwitch_e framework by @embg in https://github.com/facebook/zstd/pull/3321

- meson: get version up front by @eli-schwartz in https://github.com/facebook/zstd/pull/3327

- Fix for MSVC C4267 warning on ARM64 (which becomes error C2220 with /WX) by @cwoffenden in https://github.com/facebook/zstd/pull/3320

- Enable dependabot for automatic GitHub Actions updates by @DimitriPapadopoulos in https://github.com/facebook/zstd/pull/3284

- Print checksum value for single frame files in cli with -v -l options by @Cyan4973 in https://github.com/facebook/zstd/pull/3332

- Fix window size resizing optimization for edge case by @daniellerozenblit in https://github.com/facebook/zstd/pull/3345

- [linux-kernel] Fix stack detection for newer gcc by @terrelln in https://github.com/facebook/zstd/pull/3348

- Reserve two fields in ZSTD_frameHeader by @embg in https://github.com/facebook/zstd/pull/3349

- Fix seekable format for empty string by @daniellerozenblit in https://github.com/facebook/zstd/pull/3346

- meson: make backtrace dependency on execinfo for musl libc compatibility by @neheb in https://github.com/facebook/zstd/pull/3276

- Refactor progress bar & summary line logic by @terrelln in https://github.com/facebook/zstd/pull/2984

- Use `__attribute__((aligned(1)))` for unaligned access by @Hello71 in https://github.com/facebook/zstd/pull/2881

- Separate parameter adaption from display update rate by @terrelln in https://github.com/facebook/zstd/pull/3354

- [decompress] Fix UB nullptr addition & improve fuzzer by @terrelln in https://github.com/facebook/zstd/pull/3356

- [legacy] Simplify legacy codebase by removing esoteric memory accesses and only use memcpy by @terrelln in https://github.com/facebook/zstd/pull/3355

- Fix corruption that rarely occurs in 32-bit mode with wlog=25 by @terrelln in https://github.com/facebook/zstd/pull/3361

- meson: partial fix for building pzstd on MSVC by @eli-schwartz in https://github.com/facebook/zstd/pull/3357

- [CI] Re-enable versions-test by @terrelln in https://github.com/facebook/zstd/pull/3371

- [api][visibility] Make the visibility macros more consistent by @terrelln in https://github.com/facebook/zstd/pull/3363

- [build] Fix ZSTD_LIB_MINIFY build option by @terrelln in https://github.com/facebook/zstd/pull/3366

- [zdict] Fix static linking only include guards by @terrelln in https://github.com/facebook/zstd/pull/3372

- check potential overflow of compressBound() by @Cyan4973 in https://github.com/facebook/zstd/pull/3362

- decompressBound tests and fix by @Cyan4973 in https://github.com/facebook/zstd/pull/3373

- Meson test fixups by @eli-schwartz in https://github.com/facebook/zstd/pull/3120

- [pzstd] Fixes for Windows build by @terrelln in https://github.com/facebook/zstd/pull/3380

- Windows MT layer bug fixes by @yoniko in https://github.com/facebook/zstd/pull/3364

- Update Copyright Comments by @felixhandte in https://github.com/facebook/zstd/pull/3173

- [docs] Clarify dictionary loading documentation by @terrelln in https://github.com/facebook/zstd/pull/3381

- [build][cmake] Fix cmake with custom assembler by @terrelln in https://github.com/facebook/zstd/pull/3382

- Pin actions/checkout Dependency to Specific Commit Hash by @felixhandte in https://github.com/facebook/zstd/pull/3384

- Improve help/usage (`-h`, `-H`) formatting by @jonpalmisc in https://github.com/facebook/zstd/pull/3385

- [cmake] Add noexecstack to compiler/linker flags by @terrelln in https://github.com/facebook/zstd/pull/3392

- Fix `-Wdocumentation` by @terrelln in https://github.com/facebook/zstd/pull/3393

- Support decompression of compressed blocks of size ZSTD_BLOCKSIZE_MAX by @Cyan4973 in https://github.com/facebook/zstd/pull/3399

- spec update : require minimum nb of literals for 4-streams mode by @Cyan4973 in https://github.com/facebook/zstd/pull/3398

- External matchfinder API by @embg in https://github.com/facebook/zstd/pull/3333

- New `ZSTD_CCtx_setCParams()` entry point, to set all parameters defined in a `ZSTD_compressionParameters` structure by @Cyan4973 in https://github.com/facebook/zstd/pull/3403

- Move deprecated annotation before static to allow C++ compilation for clang by @danlark1 in https://github.com/facebook/zstd/pull/3400

- Optimal huff depth speed improvements by @daniellerozenblit in https://github.com/facebook/zstd/pull/3302

- improve compression ratio of small alphabets by @Cyan4973 in https://github.com/facebook/zstd/pull/3391

- Fix fuzzing with ZSTD_MULTITHREAD by @danlark1 in https://github.com/facebook/zstd/pull/3417

- minor refactoring for timefn by @Cyan4973 in https://github.com/facebook/zstd/pull/3413

- Add support for in-place decompression by @terrelln in https://github.com/facebook/zstd/pull/3421

- fix when nb of literals is very small by @Cyan4973 in https://github.com/facebook/zstd/pull/3419

- Deprecate advanced streaming functions by @embg in https://github.com/facebook/zstd/pull/3408

- Disable Custom ASAN/MSAN Poisoning on MinGW Builds by @felixhandte in https://github.com/facebook/zstd/pull/3424

- [tests] Fix version test determinism by @terrelln in https://github.com/facebook/zstd/pull/3422

- Refactor `timefn` unit, restore support for `clock_gettime()` by @Cyan4973 in https://github.com/facebook/zstd/pull/3423

- Fuzz on maxBlockSize by @daniellerozenblit in https://github.com/facebook/zstd/pull/3418

- Fuzz the External Matchfinder API by @embg in https://github.com/facebook/zstd/pull/3437

- Cap hashLog & chainLog to ensure that we only use 32 bits of hash by @terrelln in https://github.com/facebook/zstd/pull/3438

- [versions-test] Work around bug in dictionary builder for older versions by @terrelln in https://github.com/facebook/zstd/pull/3436

- added c89 build test to CI by @Cyan4973 in https://github.com/facebook/zstd/pull/3435

- added cygwin tests to Github Actions by @Cyan4973 in https://github.com/facebook/zstd/pull/3431

- Huffman refactor by @terrelln in https://github.com/facebook/zstd/pull/3434

- Fix bufferless API with attached dictionary by @terrelln in https://github.com/facebook/zstd/pull/3441

- Test PGO Builds by @felixhandte in https://github.com/facebook/zstd/pull/3442

- Fix CLI Handling of Permissions and Ownership by @felixhandte in https://github.com/facebook/zstd/pull/3432

- Fix -Wstringop-overflow warning by @terrelln in https://github.com/facebook/zstd/pull/3440

- refactor : --rm ignored with stdout by @Cyan4973 in https://github.com/facebook/zstd/pull/3443

- Fix sequence validation and seqStore bounds check by @daniellerozenblit in https://github.com/facebook/zstd/pull/3439

- Fix ZSTD_estimate* and ZSTD_initCStream() docs by @embg in https://github.com/facebook/zstd/pull/3448

- Fix 32-bit build errors in zstd seekable format by @daniellerozenblit in https://github.com/facebook/zstd/pull/3452

- Fuzz large offsets through sequence compression api by @daniellerozenblit in https://github.com/facebook/zstd/pull/3447

- [huf] Add generic C versions of the fast decoding loops by @terrelln in https://github.com/facebook/zstd/pull/3449

- Provide more accurate error codes for busy-loop scenarios by @Cyan4973 in https://github.com/facebook/zstd/pull/3455

- disable --rm on -o command by @Cyan4973 in https://github.com/facebook/zstd/pull/3450

- [Bugfix] CLI row hash flags set the wrong values by @yoniko in https://github.com/facebook/zstd/pull/3457

- [huf] Fix bug in fast C decoders by @terrelln in https://github.com/facebook/zstd/pull/3459

- Disable status updates when stderr is not the console by @Cyan4973 in https://github.com/facebook/zstd/pull/3458

- fix long offset resolution by @daniellerozenblit in https://github.com/facebook/zstd/pull/3460

- Simplify 32-bit long offsets decoding logic by @terrelln in https://github.com/facebook/zstd/pull/3467

New Contributors

- @WojciechMula made their first contribution in https://github.com/facebook/zstd/pull/3013

- @trixirt made their first contribution in https://github.com/facebook/zstd/pull/3026

- @brailovich made their first contribution in https://github.com/facebook/zstd/pull/3027

- @u1f35c made their first contribution in https://github.com/facebook/zstd/pull/3042

- @shadchin made their first contribution in https://github.com/facebook/zstd/pull/3050

- @ooosssososos made their first contribution in https://github.com/facebook/zstd/pull/3054

- @TocarIP made their first contribution in https://github.com/facebook/zstd/pull/3075

- @xry111 made their first contribution in https://github.com/facebook/zstd/pull/3084

- @niamster made their first contribution in https://github.com/facebook/zstd/pull/3079

- @dirkmueller made their first contribution in https://github.com/facebook/zstd/pull/3059

- @cyberknight777 made their first contribution in https://github.com/facebook/zstd/pull/3088

- @dpelle made their first contribution in https://github.com/facebook/zstd/pull/3095

- @paulmenzel made their first contribution in https://github.com/facebook/zstd/pull/3108

- @cuishuang made their first contribution in https://github.com/facebook/zstd/pull/3117

- @averred made their first contribution in https://github.com/facebook/zstd/pull/3135

- @JunHe77 made their first contribution in https://github.com/facebook/zstd/pull/3145

- @htnhan made their first contribution in https://github.com/facebook/zstd/pull/3184

- @udayanbapat made their first contribution in https://github.com/facebook/zstd/pull/3118

- @zhuhan0 made their first contribution in https://github.com/facebook/zstd/pull/3205

- @mgord9518 made their first contribution in https://github.com/facebook/zstd/pull/3218

- @qiongsiwu made their first contribution in https://github.com/facebook/zstd/pull/3219

- @orbea made their first contribution in https://github.com/facebook/zstd/pull/3217

- @cgbur made their first contribution in https://github.com/facebook/zstd/pull/3215

- @tomcwang made their first contribution in https://github.com/facebook/zstd/pull/3208

- @mileshu made their first contribution in https://github.com/facebook/zstd/pull/3196

- @zengyijing made their first contribution in https://github.com/facebook/zstd/pull/3226

- @grossws made their first contribution in https://github.com/facebook/zstd/pull/3230

- @wahern made their first contribution in https://github.com/facebook/zstd/pull/3241

- @daniellerozenblit made their first contribution in https://github.com/facebook/zstd/pull/3258

- @DimitriPapadopoulos made their first contribution in https://github.com/facebook/zstd/pull/3259

- @sashashura made their first contribution in https://github.com/facebook/zstd/pull/3264

- @haampie made their first contribution in https://github.com/facebook/zstd/pull/3247

- @Tachi107 made their first contribution in https://github.com/facebook/zstd/pull/3252

- @ctkhanhly made their first contribution in https://github.com/facebook/zstd/pull/3268

- @MaskRay made their first contribution in https://github.com/facebook/zstd/pull/3273

- @ilyakurdyukov made their first contribution in https://github.com/facebook/zstd/pull/3281

- @ojeda made their first contribution in https://github.com/facebook/zstd/pull/3294

- @GermanAizek made their first contribution in https://github.com/facebook/zstd/pull/3304

- @joycebrum made their first contribution in https://github.com/facebook/zstd/pull/3309

- @yiyuaner made their first contribution in https://github.com/facebook/zstd/pull/3300

- @nmoinvaz made their first contribution in https://github.com/facebook/zstd/pull/3289

- @jonpalmisc made their first contribution in https://github.com/facebook/zstd/pull/3385

Full Automated Changelog: https://github.com/facebook/zstd/compare/v1.5.2...v1.5.4

Published by felixhandte over 2 years ago

Zstandard v1.5.2 is a bug-fix release, addressing issues that were raised with the v1.5.1 release.

In particular, as a side-effect of the inclusion of assembly code in our source tree, binary artifacts were being marked as needing an executable stack on non-amd64 architectures. This release corrects that issue. More context is available in #2963.

This release also corrects a performance regression, introduced in v1.5.0, that slowed down compression of very small data when using the streaming API. Issue #2966 tracks that topic.

In addition there are a number of smaller improvements and fixes.

Full Changelist

- Fix zstd-static output name with MINGW/Clang by @MehdiChinoune in https://github.com/facebook/zstd/pull/2947

- storeSeq & mlBase : clarity refactoring by @Cyan4973 in https://github.com/facebook/zstd/pull/2954

- Fix mini typo by @fwessels in https://github.com/facebook/zstd/pull/2960

- Refactor offset+repcode sumtype by @Cyan4973 in https://github.com/facebook/zstd/pull/2962

- meson: fix MSVC support by @eli-schwartz in https://github.com/facebook/zstd/pull/2951

- fix performance issue in scenario #2966 (part 1) by @Cyan4973 in https://github.com/facebook/zstd/pull/2969

- [meson] Explicitly disable assembly for non clang/gcc compilers by @terrelln in https://github.com/facebook/zstd/pull/2972

- Mark Huffman Decoder Assembly noexecstack on All Architectures by @felixhandte in https://github.com/facebook/zstd/pull/2964

- Improve Module Map File by @felixhandte in https://github.com/facebook/zstd/pull/2953

- Remove Dependencies to Allow the Zstd Binary to Dynamically Link to the Library by @felixhandte in https://github.com/facebook/zstd/pull/2977

- [opt] Fix oss-fuzz bug in optimal parser by @terrelln in https://github.com/facebook/zstd/pull/2980

- [license] Fix license header of huf_decompress_amd64.S by @terrelln in https://github.com/facebook/zstd/pull/2981

- Fix stderr progress logging for decompression by @terrelln in https://github.com/facebook/zstd/pull/2982

- Fix tar test cases by @sunwire in https://github.com/facebook/zstd/pull/2956

- Fixup MSVC source file inclusion for cmake builds by @hmaarrfk in https://github.com/facebook/zstd/pull/2957

- x86-64: Hide internal assembly functions by @hjl-tools in https://github.com/facebook/zstd/pull/2993

- Prepare v1.5.2 by @felixhandte in https://github.com/facebook/zstd/pull/2987

- Documentation and minor refactor to clarify MT memory management by @embg in https://github.com/facebook/zstd/pull/3000

- Avoid updating timestamps when the destination is stdout by @floppym in https://github.com/facebook/zstd/pull/2998

- [build][asm] Pass ASFLAGS to the assembler instead of CFLAGS by @terrelln in https://github.com/facebook/zstd/pull/3009

- Update CI documentation by @embg in https://github.com/facebook/zstd/pull/2999

New Contributors

- @MehdiChinoune made their first contribution in https://github.com/facebook/zstd/pull/2947

- @fwessels made their first contribution in https://github.com/facebook/zstd/pull/2960

- @sunwire made their first contribution in https://github.com/facebook/zstd/pull/2956

- @hmaarrfk made their first contribution in https://github.com/facebook/zstd/pull/2957

- @floppym made their first contribution in https://github.com/facebook/zstd/pull/2998

Full Changelog: https://github.com/facebook/zstd/compare/v1.5.1...v1.5.2

Published by Cyan4973 almost 3 years ago

Notice: it has been brought to our attention that the v1.5.1 library might be built with an executable stack on non-x64 architectures, which could be flagged as problematic by systems with strict security settings that disallow executable stacks. We are currently reviewing the issue. Be aware of it if you build libzstd for a non-x64 architecture.

Zstandard v1.5.1 is a maintenance release, bringing a good number of small refinements to the project. It also offers a welcome crop of performance improvements, as detailed below.

Performance Improvements

Speed improvements for fast compression (levels 1–4)

PRs #2749, #2774, and #2921 refactor single-segment compression for ZSTD_fast and ZSTD_dfast, which back compression levels 1 through 4 (as well as the negative compression levels). Speedups in the ~3-5% range are observed. In addition, the compression ratio of ZSTD_dfast (levels 3 and 4) is slightly improved.

Rebalanced middle compression levels

v1.5.0 introduced major speed improvements for mid-level compression (levels 5 to 12), while preserving a roughly similar compression ratio. As a consequence, the speed scale became tilted towards faster speeds. Unfortunately, the difference between successive levels was no longer regular, and there was a large performance gap just after the impacted range, between levels 12 and 13.

v1.5.1 rebalances parameters so that compression levels can be roughly associated with their former speed budget. Consequently, v1.5.1 mid compression levels feature speeds closer to those of v1.4.9 (though still noticeably faster) and receive an improved compression ratio in exchange, as shown in the graph below.

Note that, since middle levels are only rebalanced, no significant performance difference between v1.5.0 and v1.5.1 should be expected, barring some special cases: levels merely occupy different positions on the same curve. The situation is a bit different for fast levels (1-4), for which v1.5.1 delivers a small but consistent performance benefit on all platforms, as described above.

Huffman Improvements

Our Huffman code was significantly revamped in this release. Both encoding and decoding speed were improved, and encoding speed for small inputs improved even further. Speed is measured on the Silesia corpus: the corpus is compressed at level 1, the literals left over after compression are extracted, and the literals from each block are then compressed and decompressed. Measurements are done on an Intel i9-9900K @ 3.6 GHz.

| Compiler | Scenario | v1.5.0 Speed | v1.5.1 Speed | Delta |
|---|---|---|---|---|
| gcc-11 | Literal compression - 128KB block | 748 MB/s | 927 MB/s | +23.9% |
| clang-13 | Literal compression - 128KB block | 810 MB/s | 927 MB/s | +14.4% |
| gcc-11 | Literal compression - 4KB block | 223 MB/s | 321 MB/s | +44.0% |
| clang-13 | Literal compression - 4KB block | 224 MB/s | 310 MB/s | +38.2% |
| gcc-11 | Literal decompression - 128KB block | 1164 MB/s | 1500 MB/s | +28.8% |
| clang-13 | Literal decompression - 128KB block | 1006 MB/s | 1504 MB/s | +49.5% |
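The Delta column corresponds to the relative change in throughput between the two versions. Taking the first row as a worked example, and assuming Delta = new speed / old speed - 1:

```latex
\Delta = \frac{v_{\text{v1.5.1}}}{v_{\text{v1.5.0}}} - 1,
\qquad
\Delta_{\text{gcc-11, 128KB compression}} = \frac{927}{748} - 1 \approx 0.239 = +23.9\%
```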

Overall impact on (de)compression speed depends on the compressibility of the data. Compression speed improves by 1-4%, and decompression speed by 5-15%.
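One way to see why the end-to-end gain is much smaller than the Huffman-only gain is Amdahl's law. This assumes that only the literal-coding stage got faster. If literals take a fraction f of total (de)compression time and that stage speeds up by a factor s, the whole run speeds up by 1 / ((1 - f) + f / s). Data with fewer matches generally leaves more bytes as literals, which raises f. The fractions in this sketch are made up for illustration, not measured, and the function names are hypothetical.

```c
#include <stdio.h>

/* Amdahl's law: overall speedup when a fraction f (0..1) of the original
 * run time is accelerated by a factor s, and the rest is unchanged. */
double overall_speedup(double f, double s)
{
    return 1.0 / ((1.0 - f) + f / s);
}

/* Illustrative only: the literal-coding fractions are invented, and the stage
 * speedup is the +23.9% gcc-11 128KB literal-compression figure from the table. */
void print_speedup_examples(void)
{
    const double stage = 1.239;
    const double fractions[] = { 0.05, 0.10, 0.20 };
    for (size_t i = 0; i < sizeof fractions / sizeof fractions[0]; i++) {
        double total = overall_speedup(fractions[i], stage);
        printf("literals = %2.0f%% of time -> overall +%.1f%%\n",
               fractions[i] * 100.0, (total - 1.0) * 100.0);
    }
}
```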