netdata

The open-source observability platform everyone needs!

GPL-3.0 License

Bot releases are visible (Hide)

Published by netdatabot over 1 year ago

- Netdata Growth

-

Release Highlights

-

Dashboard Sections' Summary Tiles

Added summary tiles to most sections of the fully-automated dashboards, to provide an instant view of the most important metrics for each section. -

Silencing of Cloud Alert Notifications

Maintenance window coming up? Active issue being checked? Use the Alert notification silencing engine to mute your notifications. -

Machine Learning - Extended Training to 24 Hours

Netdata now trains multiple models per metric, to learn the behavior of each metric for the last 24 hours. Trained models are persisted on disk and are loaded back on Netdata restart. -

Rewritten SSL Support for the Agent

Netdata Agent now features a new SSL layer that allows it to reliably use SSL on all its features, including the API and Streaming.

-

Dashboard Sections' Summary Tiles

- Alerts and Notifications

- Visualizations / Charts and Dashboards

- Preliminary steps to split native packages

- Acknowledgements

- Contributions

- Deprecation notice

- Cloud recommended version

- Release meetup

- Support options

- Running survey

Netdata Growth

🚀 Our community growth is increasing steadily. ❤️ Thank you! Your love and acceptance give us the energy and passion to work harder to simplify and make monitoring easier, more effective and more fun to use.

- Over 63,000 GitHub Stars ⭐

- Over 1.5 million online nodes

- Almost 94 million sessions served

- Over 600 thousand total nodes in Netdata Cloud

Wow! Netdata Cloud is about to become the biggest and most scalable monitoring infra ever created!

Let the world know you love Netdata.

Give Netdata a ⭐ on GitHub now.

Motivate us to keep pushing forward!

Unlimited Docker Hub Pulls!

To help our community use Netdata more broadly, we just signed an agreement with Docker for the purchase of Rate Limit Removal, which will remove all Docker Hub pull limits for the Netdata repos at Docker Hub. We expect this add-on to be applied to our repos in the following few days, so that you will enjoy unlimited Docker Hub pulls of Netdata Docker images for free!

Release Highlights

Dashboard Sections' Summary Tiles

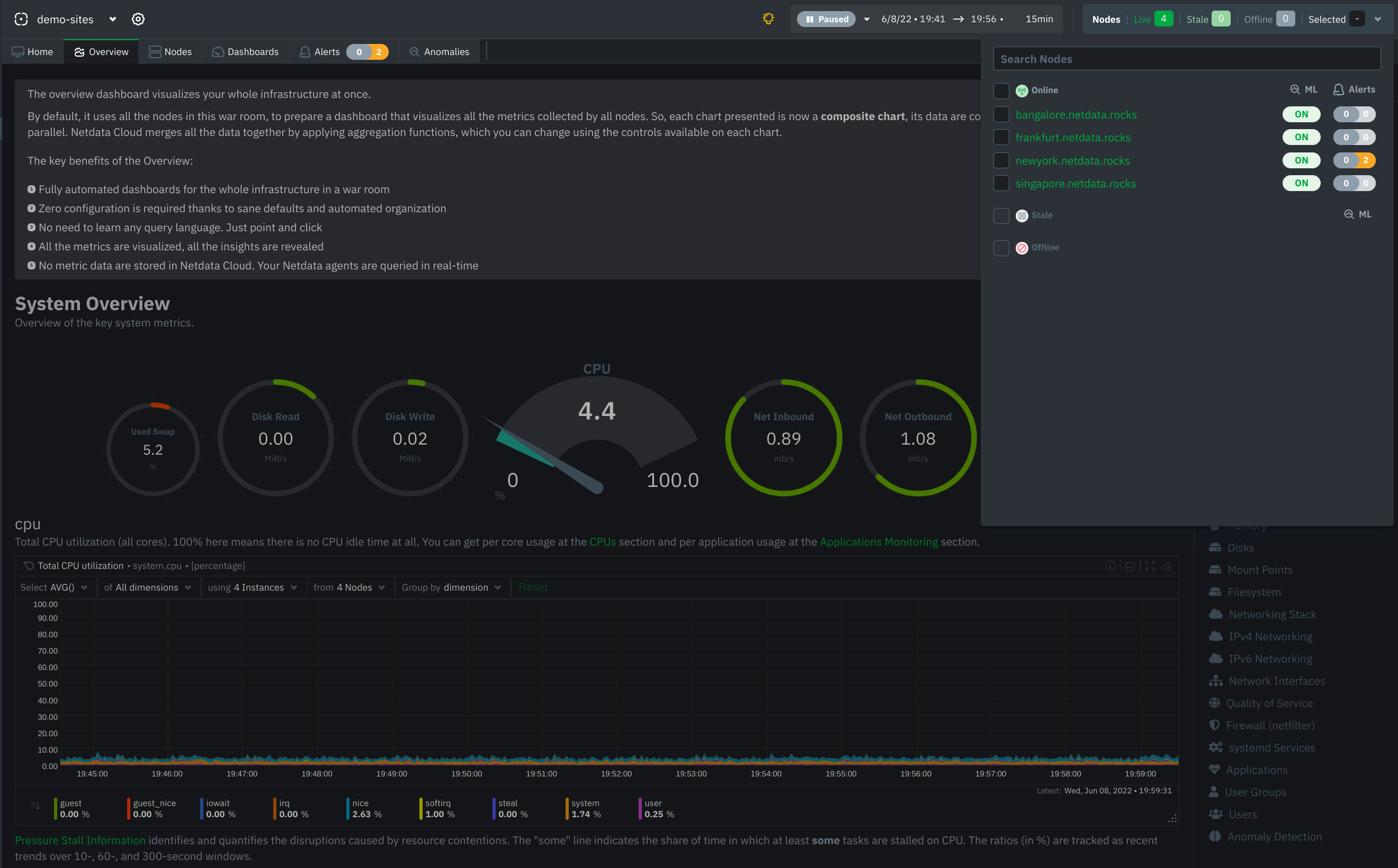

Netdata Cloud dashboards have been improved to provide instant summary tiles for most of their sections. This includes system overview, disks, network interfaces, memory, mysql, postgresql, nginx, apache, and dozens more.

To accomplish this, we extended the query engine of Netdata to support multiple grouping passes, so that queries like "sum metrics by label X, and then average by node" are now possible. At the same time we made room for presenting anomaly rates on them (vertical purple bar on the right) and significantly improved the tile placement algorithm to support multi-line summary headers and precise sizing and positioning, providing a look and feel like this:

The following chart tile types have been added:

- Donut

- Gauge

- Bar

- Trendline

- Number

- Pie chart

To improve the efficiency of using these tiles, each of these tiles supports the following interactive actions:

- Clicking the title of the tile scroll the dashboard to the data source chart, where you can slice, dice and filter the data based on which the tile was created.

- Hovering the tile with your mouse pointer, the NIDL (Nodes, Instances, Dimensions, Labels) framework buttons appear, allowing you to explore and filter the data set, right on the tile.

Some examples that you can see from the Netdata Demo space:

Silencing of Cloud Alert Notifications

Although Netdata Agent alerts support silencing, centrally dispatched alert notifications from Netdata Cloud were missing that feature. Today, we release alert notifications silencing rules for Netdata Cloud!

Silencing rules are applied on any combination of the following: users, rooms, nodes, host labels, contexts (charts), alert name, alert role. For the matching alerts, silencing can optionally have a starting date and time and/or an ending date time.

With this feature you can now easily setup silencing rules, which can be set to be applied immediately or at a defined schedule, allowing you to plan for upcoming schedule maintenance windows - see some examples here.

Read more about Silencing Alert notifications on our documentation.

Machine Learning - Extended Training to 24 Hours

Netdata trains ML models for each metric, using its past data. This allows Netdata to detect anomalous behaviors in metrics, based exclusively on the recent past data of the metric itself.

Before this release Netdata was training one model of each metric, learning the behavior of each metric during the last 4 hours. In the previous release we introduced persisting these models to disk and loading them back when Netdata restarts.

In this release we change the default ML settings to support multiple models per metric, maintaining multiple trained models per metric, covering the behavior of each metric for last 24 hours. All these models are now consulted automatically in order to decide if a data collection point is anomalous or not.

This has been implemented in a way to avoid introducing additional CPU overhead on Netdata agents. So, instead of training one model for 24 hours which would introduce significant query overhead on the server, we train each metric every 3 hours using the last 6 hours of data, and we keep 9 models per metric. The most recent model is consulted first during anomaly detection. Additional models are consulted as long as the previous ones predict an anomaly. So only when all 9 models agree that a data collection is anomalous, we mark the collected sample as anomalous in the database.

The impact of these changes is more accurate anomaly detection out of the box, with much fewer false positives.

You can read more about it in this deck presented during a recent office hours (office hours recording).

Rewritten SSL Support for the Agent

The SSL support at the Netdata Agent has been completely rewritten. The new code now reliably support SSL connections for both the Netdata internal web server and streaming. It is also easier to understand, troubleshoot and expand. At the same time performance has been improved by removing redundant checks.

During this process a long-standing bug on streaming connection timeouts has been identified and fixed, making streaming reliable and robust overall.

Alerts and Notifications

Mattermost notifications for Business Plan users

To keep building up on our set of existing alert notification methods we added Mattermost as another notification integration option on Netdata Cloud.

As part of our commitment to expanding our set of alert notification methods, Mattermost provides another reliable way to deliver alerts to your team, ensuring the continuity and reliability of your services.

Business Plan users can now configure Netdata Cloud to send alert notifications to their team on Mattermost.

Visualizations / Charts and Dashboards

Netdata Functions

On top of the work done on release v1.38, where we introduced real-time functions that enable you to trigger specific routines to be executed by a given Agent on demand. Our initial function provided detailed information on currently running processes on the node, effectively replacing top and iotop.

We have now added the capability to group your results by specific attributes. For example, on the Processes function you are now able to group the results by: Category, Cmd or User.

With this capability you can now get a consolidated view of your reported statistics over any of these attributes.

External plugin integration

The agent core has been improved when it comes to integration with external plugins. Under certain conditions, a failed plugin would not be correctly acknowledged by the agent resulting in a defunc (i.e. zombie) plugin process. This is now fixed.

Preliminary steps to split native packages

Starting with this release, our official DEB/RPM packages have been split so that each external data collection

plugin is in its own package instead of having everything bundled into a single package. We have previously had

our CUPS and FreeIPMI collectors split out like this, but this change extends that to almost all of our external

data collectors. This is the first step towards making these external collectors optional on installs that use

our native packages, which will in turn allow users to avoid installing things they don’t actually need.

Short-term, these external collectors are listed as required dependencies to ensure that updates work correctly. At

some point in the future almost all of them will be changed to be optional dependencies so that users can pick

and choose which ones they want installed.

This change also includes a large number of fixes for minor issues in our native packages, including better handling

of user accounts and file permissions and more prevalent usage of file capabilities to improve the security of

our native packages.

Acknowledgements

We would like to thank our dedicated, talented contributors that make up this amazing community. The time and expertise that you volunteer are essential to our success. We thank you and look forward to continuing to grow together to build a remarkable product.

- @n0099 for fixing typos in the documentation.

- @mochaaP for fixing cross-compiling issues.

- @jmphilippe for making control address configurable in python.d/tor.

- @TougeAI for documenting the "age" configuration option in python.d/smartd_log.

- @mochaaP for adding support of python-oracledb to python.d/oracledb.

Contributions

Collectors

Improvements

- Add parent_table label to table/index metrics (go.d/postgres) (#1199, @ilyam8)

- Make tables and indexes limit configurable (go.d/postgres) (#1200, @ilyam8)

- Add Hyper-V metrics (go.d/windows) (#1164, @thiagoftsm)

- Add "maps per core" config option (ebpf.plugin) (#14691, @thiagoftsm)

- Add plugin that collect metrics from /sys/fs/debugfs (debugfs.plugin) (#15017, @thiagoftsm)

- Add support of python-oracledb (python.d/oracledb) (#15074, @EricAndrechek)

- Make control address configurable (python.d/tor) (#15041, @jmphilippe)

- Make connection protocol configurable (python.d/oracledb) (#15104, @ilyam8)

- Add availability status chart and alarm (freeipmi.plugin) (#15151, @ilyam8)

- Improve error messages when legacy code is not installed (ebpf.plugin) (#15146, @thiagoftsm)

Bug fixes

- Fix handling of newlines in HELP (go.d/prometheus) (#1196, @ilyam8)

- Fix collection of bind mounts (diskspace.plugin) (#14831, @MrZammler)

- Fix collection of zero metrics if Zswap is disabled (debugfs.plugin) (#15054, @ilyam8)

Other

- Document the "age" configuration option (python.d/smartd_log) (#15171, @TougeAI)

- Send EXIT before exiting in (freeipmi.plugin, debugfs.plugin) (#15140, @ilyam8)

Documentation

- Add Mattermost cloud integration docs (#15141, @car12o)

- Update Events and Silencing Rules docs (#15134, @hugovalente-pm)

- Fix a typo in simple patterns readme (#15135, @n0099)

- Add netdata demo rooms to the list of demo urls (#15120, @andrewm4894)

- Add initial draft for the silencing docs (#15112, @hugovalente-pm)

- Create category overview pages for Learn restructure (#15091, @Ancairon)

- Mention waive off of space subscription price (#15082, @hugovalente-pm)

- Update Security doc (#15072, @tkatsoulas)

- Update netdata-security.md (#15068, @cakrit)

- Fix wording in interact with charts doc (#15040, @Ancairon)

- Fix wording in the database readme (#15034, @Ancairon)

- Update troubleshooting-agent-with-cloud-connection.md (#15029, @cakrit)

- Update the billing docs for the flow (#15014, @hugovalente-pm)

- Update chart documentation (#15010, @Ancairon)

Packaging / Installation

- Fix package conflicts policy on deb based packages (#15170, @tkatsoulas)

- Fix user and group handling in DEB packages (#15166, @Ferroin)

- Change mandatory packages for RPMs (#15165, @tkatsoulas)

- Restrict ebpf dep in DEB package to amd64 only (#15161, @Ferroin)

- Make plugin packages hard dependencies (#15160, @Ferroin)

- Update libbpf to v1.2.0 (#15038, @thiagoftsm)

- Provide necessary permission for the kickstart to run the netdata-updater script (#15132, @tkatsoulas)

- Fix bundling of eBPF legacy code for DEB packages (#15127, @Ferroin)

- Fix package versioning issues (#15125, @Ferroin)

- Fix handling of eBPF plugin for DEB packages (#15117, @Ferroin)

- Improve some of the error messages in the kickstart script (#15061, @Ferroin)

- Split plugins to individual packages for DEB/RPM packaging (#13927, @Ferroin)

- Update agent telemetry url to be cloud function instead of posthog (#15085, @andrewm4894)

- Remove Fedora 36 from CI and platform support. (#14938, @Ferroin)

- Fix a fatal in the claiming script when the main action is not claiming (#15039, @ilyam8)

- Remove old logic for handling of legacy stock config files (#14829, @Ferroin)

- Make zlib compulsory dep (#14928, @underhood)

- Replace JudyLTablesGen with generated files (#14984, @mochaaP)

- Update SQLITE to version 3.41.2 (#15031, @stelfrag)

Streaming

Health

- Fix cockroachdb alarms (#15095, @ilyam8)

- Use chart labels to filter alarms (#14982, @MrZammler)

- Remove "families" from alarm configs (#15086, @ilyam8)

Exporting

- Add chart labels to Prometheus exporter (#15099, @thiagoftsm)

- Fix out-of-order labels in Prometheus exporter (#15094, @thiagoftsm)

- Fix out-of-order labels in Prometheus remote write exporter (#15097, @thiagoftsm)

ML

- Update ML defaults to 24h (#15093, @andrewm4894)

Other Notable Changes

Improvements

- Reduce netdatacli size (#15024, @stelfrag)

- Make percentage-of-group aggregatable at cloud (#15126, @ktsaou)

- Add percentage calculation on grouped queries to /api/v2/data (#15100, @ktsaou)

- Add status information and streaming stats to /api/v2/nodes (#15162, @ktsaou)

Bug fixes

- Fix the units when returning percentage of a group (#15105, @ktsaou)

- Fix uninitialized array vh in percentage-of-group (#15106, @ktsaou)

- Fix not respecting maximum message size limit of MQTT server (#15009, @underhood)

- Fix not freeing context when establishing an ACLK connection (#15073, @stelfrag)

- Fix sanitizing square brackets in label value (#15131, @ilyam8)

- Fix crash when UUID is NULL in SQLite (#15147, @stelfrag)

Code organization

- Add initial minimal h2o webserver integration (#14585, @underhood)

- Release buffer in case of error -- CID 385075 (#15090, @stelfrag)

- Improve cleanup of health log table (#15045, @MrZammler)

- Simplify loop in alert checkpoint (#15065, @MrZammler)

- Only queue an alert to the cloud when it's inserted (#15110, @MrZammler)

- Generate, store and transmit a unique alert event_hash_id (#15111, @MrZammler)

- Fix syntax in config.ac (#15139, @underhood)

- Add library to encode/decode Gorilla compressed buffers. (#15128, @vkalintiris)

- Fix coverity issues (#15169, @stelfrag)

- Fix CID 385073 -- Uninitialized scalar variable (#15163, @stelfrag)

- Fix CodeQL warning (#15062, @stelfrag)

Deprecation notice

The following items will be removed in our next minor release (v1.41.0):

Patch releases (if any) will not be affected.

| Component | Type | Will be replaced by |

|---|---|---|

| python.d/nvidia_smi | collector | go.d/nvidia_smi |

family attribute |

alert configuration and Health API | chart labels attribute (more details on netdata#15030) |

Cloud recommended version

When using Netdata Cloud, the required agent version to take most benefits from the latest features is one version before the last stable.

On this release this will become v1.39.1 and you'll be notified and guided to take action on the UI if you are running agents on lower versions.

Check here for details on how to Update Netdata agents.

Netdata Release Meetup

Join the Netdata team on the 19th of June at 16:00 UTC for the Netdata Release Meetup.

Together we’ll cover:

- Release Highlights.

- Acknowledgements.

- Q&A with the community.

RSVP now - we look forward to meeting you.

Support options

As we grow, we stay committed to providing the best support ever seen from an open-source solution. Should you encounter an issue with any of the changes made in this release or any feature in the Netdata Agent, feel free to contact us through one of the following channels:

- Netdata Learn: Find documentation, guides, and reference material for monitoring and troubleshooting your systems with Netdata.

- GitHub Issues: Make use of the Netdata repository to report bugs or open a new feature request.

- GitHub Discussions: Join the conversation around the Netdata development process and be a part of it.

- Community Forums: Visit the Community Forums and contribute to the collaborative knowledge base.

- Discord Server: Jump into the Netdata Discord and hang out with like-minded sysadmins, DevOps, SREs, and other troubleshooters. More than 1400 engineers are already using it!

Running survey

Helps us make Netdata even greater! We are trying to gather valuable information that is key for us to better position Netdata and ensure we keep bringing more value to you.

We would appreciate if you could take some time to answer this short survey (4 questions only).

Published by netdatabot over 1 year ago

This patch release provides the following bug fixes:

-

We noticed that claiming and enabling auto-updates have been failing due to incorrect permissions when

kickstart.shwas doing a static installation. The issue has affected all static installations, including the one done from the Windows MSI installer. The permissions have now been corrected. -

The recipient lists of agent alert notifications are configurable via the

health_alarm_notify.conffile. A stock file with default configurations can be modified usingedit-config. @jamgregory noticed that the default settings in that file can make changing role recipients confusing. Unless the edited configuration file included every setting of the original stock file, the resulting behavior was unintuitive. @jamgregory kindly added a PR to fix the handling of custom role recipient configurations. -

A bug in our collection and reporting of Infiniband bandwidth was discovered and fixed.

-

We noticed memory buffer overflows under some very specific conditions. We adjusted the relevant buffers and the calls to

strncpyzto prevent such overflows. -

A memory leak in certain circumstances was found in the ACLK code. We fixed the the incorrect data handling that caused it.

-

An unrelated memory leak was discovered in the ACLK code and has also been fixed.

-

Exposing the anomaly rate right on top of each chart in Netdata Cloud surfaced an issue of bad ML models on some very noisy metrics. We addressed the issue by suppressing the indications that these noisy metrics would produce. This change gives the ML model a chance to improve, based on additional collected data.

-

Finally, we improved the handling of errors during ML transactions, so that transactions are properly rolled back, instead of failing in the middle.

Support options

As we grow, we stay committed to providing the best support ever seen from an open-source solution. Should you encounter

an issue with any of the changes made in this release or any feature in the Netdata Agent, feel free to contact us

through one of the following channels:

-

Netdata Learn: Find documentation, guides, and reference material for monitoring and

troubleshooting your systems with Netdata. -

GitHub Issues: Make use of the Netdata repository to report bugs or open

a new feature request. -

GitHub Discussions: Join the conversation around the Netdata

development process and be a part of it. -

Community Forums: Visit the Community Forums and contribute to the collaborative

knowledge base. -

Discord: Jump into the Netdata Discord and hangout with like-minded sysadmins,

DevOps, SREs and other troubleshooters. More than 1300 engineers are already using it!

Published by netdatabot over 1 year ago

- Netdata open-source growth

-

Release highlights

-

Netdata Charts v3.0

A new era for monitoring charts. Powerful, fast, easy to use. Instantly understand the dataset behind any chart. Slice, dice, filter and pivot the data in any way possible! -

Windows support

Windows hosts are now first-class citizens. You can now enjoy out-of-the-box monitoring of over 200 metrics from your Windows systems and the services that run on them. -

Virtual nodes and custom labels

You now have access to more monitoring superpowers for managing medium to large infrastructures. With custom labels and virtual hosts, you can easily organize your infrastructure and ensure that troubleshooting is more efficient. -

Major upcoming changes

Separate packages for data collection plugins, mandatoryzlib, no upgrades of existing installs from versions prior to v1.11. - Bar charts for functions

-

Opsgenie notifications for Business Plan users

Business plan users can now seamlessly integrate Netdata with their Atlassian Opsgenie alerting and on call management system.

-

Netdata Charts v3.0

-

Data Collection

- Containers and VMs CGROUPS

- Docker

- Kubernetes

- Kernel traces/metrics eBPF

- Disk Space Monitoring

- OS Provided Metrics proc.plugin

- PostgreSQL

- DNS Query

- HTTP endpoint check

- Elasticsearch and OpenSearch

- Dnsmasq DNS Forwarder

- Envoy

- Files and directories

- RabbitMQ

- charts.d.plugin

- Anomalies

- Generic structured data with Pandas

- Generic Prometheus collector

- Alerts and Notifications

- Visualizations / Charts and Dashboards

- Machine Learning

- Installation and Packaging

- Administration

- Documentation and Demos

- Deprecation notice

- Netdata Agent Release Meetup

- Support options

- Running survey

- Acknowledgements

Netdata open-source growth

- Over 62,000 GitHub Stars

- Over 1.5 million online nodes

- Almost 92 million sessions served

- Over 600 thousand total nodes in Netdata Cloud

Release highlights

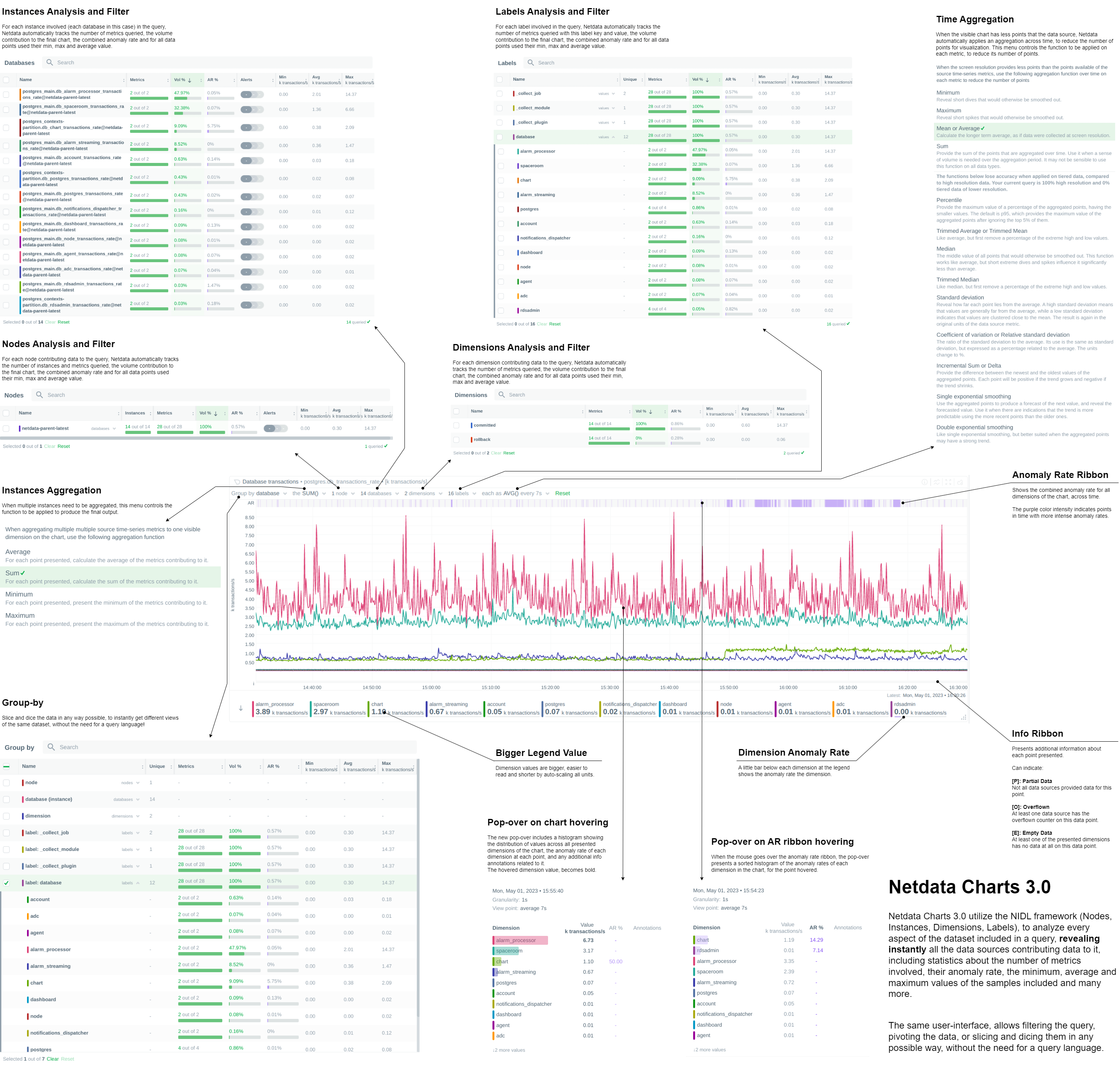

Netdata Charts v3.0

We are excited to announce Netdata Charts v3.0 and the NIDL framework. These are currently available at Netdata Cloud. At the next Netdata release, the agent dashboard will be replaced to also use the same charts.

One of the key obstacles in understanding an infrastructure and troubleshooting issues, is making sense of the data we see on charts. Most monitoring solutions assume that the users have a deep understanding of the underlying data, so during visualization they actually do nothing to help users comprehend the data easier or faster. The problem becomes even more apparent when the users troubleshooting infrastructure problems are the not the ones who developed the dashboards. In these cases all kinds of misunderstandings are possible, resulting in bad decisions and slower time to resolution.

To help users instantly understand and validate the data they see on charts, we developed the NIDL (Nodes, Instances, Dimensions, Labels) framework and we changed all the Netdata query engines, at both the agent and the cloud, to enrich the returned data with additional information. This information is then visualized on all charts.

Embedded Machine Learning for every metric

Netdata's unsupervised machine learning algorithm creates a unique model for each metric collected by your agents, using exclusively the metric's past data. We don't train ML models on a lab, or on aggregated sample data. We then use these unique models during data collection to predict the value that should be collected and check if the collected value is within the range of acceptable values based on past patterns and behavior. If the value collected is an outlier, we mark it as anomalous. This unmatched capability of real-time predictions as data is collected allows you to detect anomalies for potentially millions of metrics across your entire infrastructure within a second of occurrence.

Before this release, users had to either go to the "Anomalies" tab, or enable anomaly rate information from a button on the charts to access the anomaly rate. We found that this was not very helpful, since a lot of users were not aware of this functionality, or they were forgetting to check it. So, we decided that the best use of this information is to visualize it by default on all charts, so that users will instantly see if the AI algorithm in Netdata believes the values are not following past behavior.

In addition to the summarized tables and chart overlay, a new anomaly rate ribbon on top of each chart visualizes the combined anomaly rate of all the underlying data, highlighting areas of interest that may not be easily visible to the naked eye.

Hovering over the anomaly rate ribbon provides a histogram of the anomaly rates per dimension presented, for the specific point in time.

Anomaly rate visualization does not make Netdata slower. Anomaly rate is saved in the the Netdata database, together with metric values, and due to the smart design of Netdata, it does not even incur a disk footprint penalty.

Introducing chart annotations for comprehensive context

Chart annotations have arrived! When hovering over the chart, the overlay may display an indication in the "Info" column.

Currently, annotations are used to inform users of any data collection issues that might affect the chart. Below each chart, we added an information ribbon. This ribbon currently shows 3 states related to the points presented in the chart:

-

[P]: Partial Data

At least one of the dimensions in the chart has partial data, meaning that not all instances available contributed data to this point. This can happen when a container is stopped, or when a node is restarted. This indicator helps to gain confidence of the dataset, in situations when unusual spikes or dives appear due to infrastructure maintenance, or due to failures to part of the infrastructure. -

[O]: Overflowed

At least one of the datasources included in the chart was a counter that has overflowed exactly that point. -

[E]: Empty Data

At least one of the dimensions included in the chart has no data at all for the given points.

All these indicators are also visualized per dimension, in the pop-over that appears when hovering the chart.

New hover pop-over

Hovering over any point in the chart now reveals a more informative overlay. This includes a bar indicating the volume percentage of each time series compared to the total, the anomaly rate, and a notification if there are data collection issues (annotations from the info ribbon).

The pop-over sorts all dimensions by value, makes bold the closest dimension to the mouse and presents a histogram based on the values of the dimensions.

When hovering the anomaly ribbon, the pop-over sorts all dimensions by anomaly rate, and presents a histogram of these anomaly rates.

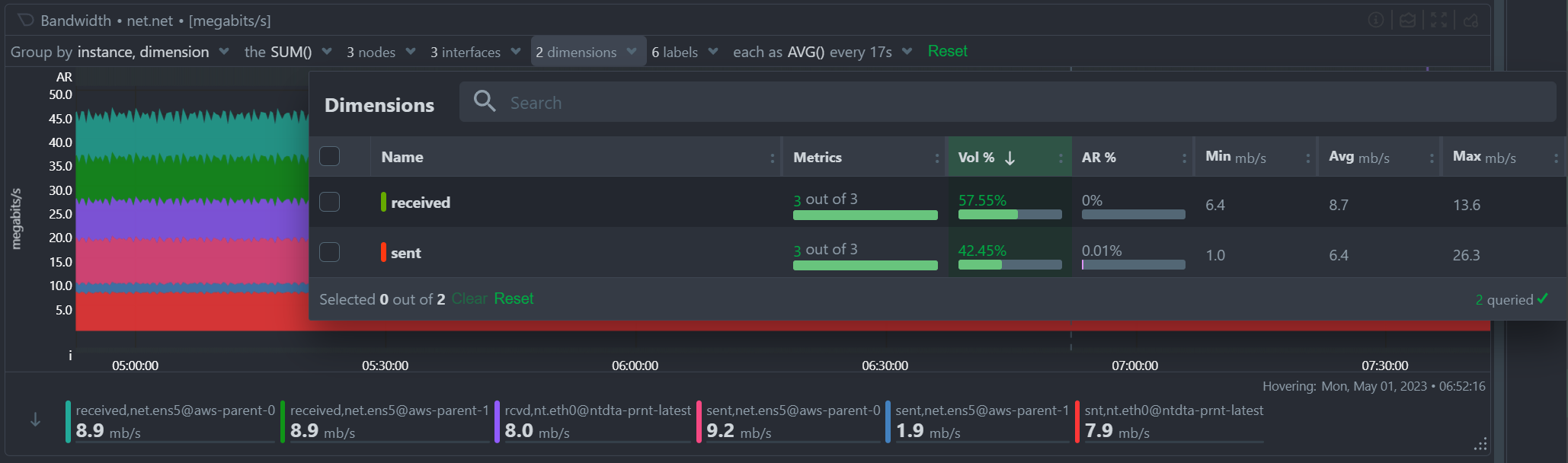

NIDL framework

You can now rapidly access condensed information for collected metrics, grouped by node, monitored instance, dimension, or any label key/value pair. Above all charts, there are a few drop-down menus. These drop-down menus have 2 functions:

- Provide additional information about the visualized chart, to help us understand the data we see.

- Provide filtering and grouping capabilities, altering the query on the fly, to help us get different views of the dataset.

In this release, we extended the query engines of Netdata (both at the agent and the cloud), to include comprehensive statistical data to help us understand what we see on the chart. We developed the NIDL framework to standardize this presentation across all charts.

The NIDL framework attaches the following metadata to every metric we collect:

- The Node each metric comes from

- The Instance each metric belongs to. An instance can be container, a disk, a network interface, a database server, a table in a given data server, etc. The instance describes which exactly component of our infrastructure we monitor. At the charts, we replaced the word "instance" with the proper name of that instance. So, when the instance is a disk, we see "disks". When it is a container, we see "containers", etc.

- The Dimensions are the individual metrics related to an instance under a specific context.

- The Labels are all the labels that are available for each metric, that many or may not be related to the node or the instance of them metric.

Since all our metrics now have these metadata, we are use them at query time, to provide for each of them the following consolidated data for the visible time frame:

- The volume contribution of each of them into the final query. So even if a query comes from 1000 nodes, we can instantly see the contribution of each node in the result. The same for instances, dimensions and labels. Especially for labels, Netdata also provides the volume contribution of each label

key:valuepair to the final query, so that we can immediately see for all label values involved in the query how much they affected the chart. - The anomaly rate of each of them for the time-frame of the query. This is used to quickly spot which of the nodes, instances, dimensions or labels have anomalies in the requested time-frame.

- The minimum, average and maximum values of all the points used for the query. This is used to quickly spot which of the nodes, instances, dimensions or labels are responsible for a spike or a dive in the chart.

All of these drop-down menus can now be used for instantly filtering the dataset, by including or excluding specific nodes, instances, dimensions or labels. Directly from the drop-down menu, without the need to edit a query string and without any additional knowledge of the underlying data.

Multiple Group-by

At the same time, the new query engine of Netdata has been enhanced to support multiple group-by at once. The "Group by" drop-down menu allows selecting 1 or more groupings to be applied at once on the same dataset. Currently it supports:

- Group by Node, to summarize the data of each node, and provide one dimension on the chart for each of the nodes involved. Filtering nodes is supported at the same time, using the nodes drop-down menu.

- Group by Instance, to summarize the data of each instance and provide one dimension on the chart for each of the instances involved. Filtering instances is supported at the same time, using the instances drop-down menu.

- Group by Dimension

- Group by Label, to summarize the data for each label value. Multiple label keys can be selected at the same time.

Using this menu, you can slice and dice the data in any possible way, to quickly get different views of them, without the need to edit a query string and without any need to better understand the format of the underlying data. Netdata will do its by itself.

Windows support

We are excited to announce that our Windows monitoring capabilities have been greatly improved with the addition of over 170 new system, network, and application metrics. This includes out-of-the-box support for MS Exchange, MS SQL, IIS, Active Directory (including AD Certificate and AD Federation Services).

To try out Netdata directly on your Windows machine, our .msi installer allows for quick and easy installation with a Netdata WSL distribution. However, for production deployments, one or more Linux nodes are still required to run Netdata and store your metrics, as shown in the provided diagram.

To fully support this architecture, we have added the ability to declare each Windows host as a Netdata node. You can learn more about this feature in the virtual nodes section.

For more information, please check out our high-level introduction to

Windows monitoring, our demo, or our Windows collector documentation.

Virtual nodes and custom labels

Netdata provides powerful tools for organizing hundreds of thousands of metrics collected every second in large infrastructures. From the automated organization into sections of related out-of-the-box aggregate charts, to concepts like spaces and war rooms that connect the metrics with the people who need to use them, scale is no problem. Easily slicing and dicing the metrics via grouping and filtering in our charts is also essential for exploration and troubleshooting, which is why we in the past we introduced host labels and default metric labels. To complete the available tool set, Netdata now offers the ability to define custom metric labels and virtual nodes. You can read how everything fits together in our documentation.

You can use custom labels to group and filter metrics in the Netdata Cloud aggregate charts. Virtual nodes work like normal Netdata Cloud nodes for the metrics you assign to them and can be added to any room.

The ability to define a virtual node is a new feature that is essential for monitoring remote Windows hosts, but has many other potential uses. For example, you may have a central monitoring node collecting data from many remote database hosts that you aren't allowed to install software on. You may also use the HTTP endpoint collector to check the availability and latency of APIs on multiple remote endpoints.

Defining virtual nodes lets you substantiate those entities that have no Netdata running on them, so they can appear in Netdata Cloud, be placed in rooms, filtered and grouped easily, and have their virtual node name displayed in alerts. Learn how to configure virtual nodes for any go.d.plugin data collection job.

Major upcoming changes

Please read carefully through the following planned changes in our packaging, support of existing installs and required dependencies, as they may impact you. We are committed to providing the most up-to-date and reliable software, and we believe that the changes outlined below will help us achieve this goal more efficiently. As always, we are happy to provide any assistance needed during this transition.

Upcoming collector packaging changes

As previously discussed on our blog, we will be changing how we package our external data collection plugins in the coming weeks. This change will be reflected in nightly builds a few weeks after this release, and in stable releases starting with v1.40.0. Please note that any patch releases for v1.39.0 will not include this change.

For detailed information on this change and how it may impact you, please refer to our blog post titled Upcoming Changes to Plugins in Native Packages.

Upcoming end of support for upgrading very old installs

Beginning shortly after this release, we will no longer be providing support for upgrading existing installs from versions prior to Netdata v1.11.0. It is highly unlikely that this change will affect any existing users, as v1.11.0 was released in 2018. However, this change is important in the long-term, as it will allow us to make our installer and updater code more portable.

Upcoming mandatory dependency on zlib

In the near future, we will be making a significant change to the Netdata agent by making zlib a mandatory dependency. Although we have not treated it as a mandatory dependency in the past, a number of features that we consider core parts of the agent rely on zlib. Given that zlib is ubiquitous across almost every platform, there is little to no benefit to it being an optional dependency. As such, this change is unlikely to have a significant impact on the vast majority of our users.

The change will be implemented in nightly builds shortly after this release and in stable releases starting with v1.40.0. Please note that any patch releases for v1.39.0 will not include this change.

Bar charts for functions

In v1.38, we introduced real-time functions that enable you to trigger specific routines to be executed by a given Agent on demand. Our initial function provided detailed information on currently running processes on the node, effectively replacing top and iotop.

We have now expanded the versatility of functions by incorporating configurable bar charts above the table displaying detailed data. These charts will be a standard feature in all future functions, granting you the ability to manipulate and analyze the retrieved data as needed.

Opsgenie notifications for Business Plan users

Ensuring the reliable delivery of alert notifications is crucial for maintaining the reliability of your services. While individual Netdata agents were already able to send alert notifications to Atlassian's Opsgenie, Netdata Cloud adds centralized control and more robust retry and failure handling mechanisms to improve the reliability of the notification delivery process.

Business Plan users can now configure Netdata Cloud to send alert notifications to their Atlassian Opsgenie platform, using our centralized alert dispatching feature. This feature helps to ensure the reliable delivery of notifications, even in cases where individual agents are offline or experiencing issues.

We are committed to continually extending the capabilities of Netdata Cloud, and our focus on centralized alert dispatching is just one example of this. By adding more centralized dispatching options, we can further increase the reliability of notification delivery and help our users maintain the highest levels of service reliability possible.

Data Collection

Containers and VMs (CGROUPS)

The cgroups plugin reads information on Linux Control Groups to monitor containers, virtual machines and systemd services.

Previously, we identified individual Docker containers solely through their container ID, which may not always provide adequate information to identify potential issues with your infrastructure. However, we've made significant improvements to our system by incorporating labels containing the image and the name of each container to all the collected metrics. These features allows you to group and filter the containers in a more efficient and effective manner, enabling you to quickly pinpoint and troubleshoot any issues that may arise

We always strive to provide the most informative chart titles and descriptions. The title of all our container CPU usage charts explain that 100% utilization means 1 CPU core, which also means you can exceed 100% when you add the utilization of multiple cores. This logic is a bit foreign to Kubernetes monitoring, where mCPU is clearer. So we modified the chart title to state that 100% utilization is equivalent to 1000 mCPUs.

We place great importance on delivering the most informative chart titles and descriptions to our users. Our container CPU usage charts are no exception. We understand that the concept of 100% CPU utilization equating to 1 CPU core, and the ability to exceed 100% by adding the utilization of multiple cores may seem a bit unfamiliar to those using Kubernetes monitoring. In light of this, we have taken steps to modify our chart title by incorporating mCPU, which provides greater clarity. The title now indicates that 100% utilization equates to 1000 mCPUs in k8s. We hope this change will help you better understand and interpret our container CPU usage charts.

Docker

Netdata monitors the Docker engine to automatically generate charts for container health and state, and image size and state.

Previously, this collector only retrieved aggregate metrics for the containers managed by the Docker engine. We started a major change

in the way we collect metrics from Docker so that we can now present the health of each container separately, or grouped by the container name and image labels. Some teething issues with this change were fixed quickly with #1160.

We recently increased the client version of our collector, which started causing issues with older Docker engine servers. We resolved these issues by adding client version negotiation to our Docker collector.

Kubernetes

Monitoring Kubernetes clusters can be challenging due to the intricate nature of the infrastructure. Identifying crucial aspects to monitor necessitates considerable expertise, which Netdata provides out-of-the-box through dedicated collectors for every layer of your Kubernetes infrastructure.

One key area to keep an eye on is the overall cluster state, which we address using the Kubernetes Cluster State Collector. This collector generates automated dashboards for 37 metrics encompassing overall node and pod resource limits and allocations, as well as pod and container readiness, health, and container restarts. Initially, we displayed the rate of container restarts, as we did with numerous other events. However, restarts are infrequent occurrences in many infrastructures. Displaying the rate of sparse events can lead to suboptimal charts for troubleshooting purposes. To address this, we have modified the logic and now present the absolute count of container restarts for enhanced clarity.

Kubernetes monitoring also relies on the cgroups plugin for container and pod monitoring. To properly label k8s containers, the cgroup plugin makes calls to the k8s API server to retrieve pod metadata. In large clusters and under certain conditions (e.g. starting all the agents at once), these requests can potentially cause serious stress on the API server, or even a denial of service incident. To address this issue we have provided an alternative to querying the API server. We now allow querying the local kubelet server for the same information. However, since the Kubelet's /pods endpoint is not well documented and should probably not be relied on (see 1, 2), we still query the API server by default. To switch to querying Kubelet, you can set the child.podsMetadata.useKubelet and child.podsMetadata.kubeletUrl variables that were added to our Helm chart.

Kernel traces/metrics (eBPF)

The eBPF Collector offers numerous eBPF programs to assist you in troubleshooting and analyzing how applications interact with the Linux kernel. By utilizing tracepoints, trampoline, and kprobes, we gather a wide range of valuable data about the host that would otherwise be unattainable.

We recently addressed some significant issues with SIGABRT crashes on some systems. These crashes were caused by problems with memory allocation and deallocation functions, which resulted in unstable system behavior and prevented users from effectively monitoring their systems. To resolve these issues, we made some significant changes to our memory allocation and deallocation functions. Specifically, we replaced these functions with more reliable alternatives and began using vector allocation where possible.

We later identified issues with memory corruption, Oracle Linux ported codes and OOMKill, which were all resolved with #14869.

Finally, issues with CPU usage on EC2 instances appeared in a nightly release and were resolved with some changes that speed up the plugin clean up process and also prevent some possible SIGABRT and SIGSEGV crashes.

These changes helped to reduce the likelihood of crashes occurring and improved the overall stability and reliability of the eBPF collector.

In some environments, the collector demanded substantial memory resources. To address this, we introduced charts to monitor its memory usage and implemented initial optimizations to decrease the RAM requirements. We will continue this work in future releases, to bring you even

more eBPF observability superpowers, with minimal resource needs.

Disk Space Monitoring

The disk space plugin is designed to monitor disk space usage and inode usage for mounted disks in Linux. However, because msdos/FAT file systems don't use inodes, the plugin would often generate false positives, leading to inaccurate results. To fix this, we've disabled inode data collection for these file systems, using the exclude inode metrics on filesystems configuration option. This option has a default value of msdosfs msdos vfat overlayfs aufs* *unionfs.

OS Provided Metrics (proc.plugin)

Our proc plugin is responsible for gathering system metrics from various endpoints, including /proc and /sys folders in Linux systems. It is an essential part of our monitoring tool, providing insights into system performance.

When running the Netdata agent in a Docker container, we encountered an issue where zram memory metrics were not being displayed. To solve this, we made changes to the zram collector code, respecting the /host prefix added to the directories mounted from the host to the container. Now, our monitoring tool can collect zram memory metrics even when running in a Docker container.

We also improved the zfs storage pool monitoring code, by adding the state suspended to the list of monitored states.

Finally, we added new metrics for BTRFS commits and device errors.

PostgreSQL

Our PostgreSQL collector is a highly advanced application collector, offering 70 out-of-the-box charts and 14 alerts to help users monitor their PostgreSQL databases with ease.

We recently discovered an issue in our documentation where we were instructing users to create a netdata user, even though our data collection job was using the postgres user. To address this issue, we have now added the netdata user as an additional option to our data collection jobs. With this enhancement, users can now use either the postgres user or the newly added netdata user to collect data from their PostgreSQL databases, ensuring a more seamless and accurate monitoring experience.

Netdata automatically generates several charts for PostreSQL write-ahead logs (WAL). We recently discovered that

wal_files_count, wal_archiving_files_count and replication_slot_files_count require superuser access, so we

added a check on whether the collection job has superuser access, before

attempting to collect these WAL metrics.

Finally, we fixed a bug with the bloat size calculation that used to erroneously return zeroes for some indexes.

DNS Query

The DNS query collector is a crucial tool that ensures optimal system performance by monitoring the liveness and latency of DNS queries. This tool is simple yet essential, as it attempts to resolve any hostname you provide and creates metrics for the response time and success or failure of each request/response.

Previously, we only measured the response time for successful queries. However, we have now enhanced the DNS query collector by collecting latency data for failed queries as well. This improvement enables us to identify and troubleshoot DNS errors more effectively, which ultimately leads to improved system reliability and performance.

HTTP endpoint check

Modern endpoint monitoring should include periodic checks on all your internal and public web applications, regardless of their traffic patterns. Automated and continuous tests can proactively identify issues, allowing them to be resolved before any users are affected.

Netdata's HTTP endpoint collector is a powerful tool that enables users to monitor the response status, latency, and content of any URL provided. While the collector has always supported basic authentication via a provided username and password, we have recently introduced a new enhancement that allows for more complex authentication flows. With the addition of the ability to include a cookie in the request, users can now authenticate and monitor more advanced applications, ensuring more comprehensive and accurate monitoring capabilities.

All you need to do is to add cookie: <filename> to your data collection job and the collector will issue the request will the contents of that file.

Elasticsearch and OpenSearch

Our Elasticsearch Collector seamlessly generates visualizations for 47 metrics, drawing from 4 endpoints of the renowned search engine.

The original Elasticsearch project evolved into an open-source initiative called OpenSearch, spearheaded by Amazon. However, our collector did not automatically connect to OpenSearch instances due to their default security settings with TLS and authentication.

Although it is possible to disable security by adding plugins.security.disabled: true to /etc/opensearch/opensearch.yml, which allows the default data collection job to function, we deemed it more prudent to introduce an OpenSearch-specific data collection job. This addition explicitly enables TLS and highlights the necessity of a username and password for secure access.

Dnsmasq DNS Forwarder

Dnsmasq is a lightweight and easy-to-configure DNS forwarder that is specifically designed to offer DNS, DHCP, and TFTP services to small-scale networks. Netdata provides comprehensive monitoring of Dnsmasq by collecting metrics for both the DHCP server and DNS forwarder.

Recently, we made a minor but important improvement to the order in which the DNS forwarder cache charts are displayed. With this update, the most critical information regarding cache utilization is now presented first, providing users with more efficient access to essential data. By constantly improving and refining our monitoring capabilities, we aim to provide our users with the most accurate and useful insights into their network performance.

Envoy

Envoy is an L7 proxy and communication bus designed for large modern service oriented architectures.

Our new Envoy collector automatically generates charts for over 50 metrics.

Files and directories

The files and directories collector monitors existence, last update and size of any files or directories you specify.

The collector was not sanitizing file and directory names, causing issues with metric collection. The issue was specific to paths with

spaces in them and is now fixed.

RabbitMQ

The Netdata agent includes a RabbitMQ collector that tracks the performance of this open-source message broker. This collector queries RabbitMQ's HTTP endpoints, including overview, node, and vhosts, to provide you with detailed metrics on your RabbitMQ instance. Recently, we fixed an issue that prevented our collector from properly collecting metrics on 32-bit systems.

charts.d.plugin

The charts.d plugin is an external plugin for Netdata. It's responsible for orchestrating data collection modules written in BASH v4+ to gather and visualize metrics.

Recently, we fixed an issue with the plugin's restarts that sometimes caused the connection to Netdata to be lost. Specifically, there was a chance for charts.d processes to die at the exact same time when the Netdata binary tried to read from them using fgets. This caused Netdata to hang, as fgets never returned. To fix this issue, we added a "last will" EOF to the exit process of the plugin. This ensures that the fgets call has something to receive before the plugin exits, preventing Netdata from hanging.

With this issue resolved, the charts.d plugin can now continue to provide seamless data collection and visualization for your Netdata instance without any disruptions.

Anomalies

Our anomaly collector is a powerful tool that uses the PyOD library in Python to perform unsupervised anomaly detection on your Netdata metrics. With this collector, you can easily identify unusual patterns in your data that might indicate issues with your system or applications.

Recently, we discovered an issue with the collector's Python version check. Specifically, the check was incorrectly rejecting Python 3.10 and higher versions due to how the float() function was casting "10" to "1". This resulted in an inaccurate check that prevented some users from using the anomaly collector with the latest versions of Python.

To resolve this issue, we fixed the Python version check to work properly with Python 3.10 and above. With this fix in place, all users can now take advantage of the anomaly collector's powerful anomaly detection capabilities regardless of the version of Python they are using.

Generic structured data (Pandas)

Pandas is a de-facto standard in reading and processing most types of structured data in Python. If you have metrics appearing in a CSV, JSON, XML, HTML, or other supported format,

either locally or via some HTTP endpoint, you can easily ingest and present those metrics in Netdata, by leveraging the Pandas collector. We fixed an issue we had logging some collector errors.

Generic Prometheus collector

Our Generic Prometheus Collector gathers metrics from any Prometheus endpoint that uses the OpenMetrics exposition format.

In version 1.38, we made some significant changes to how we generate charts with labels per label set. These changes resulted in a drastic increase in the length of generated chart IDs, which posed some challenges for users with a large number of label key/value pairs. In some cases, the length of the type.id` string could easily exceed the previous limit of 200 characters, which prevented users from effectively monitoring their systems.

To resolve this issue, we took action to increase the chart ID limit from 200 to 1000 characters. This change provides you with more flexibility when it comes to labeling their charts and ensures that you can effectively monitor their systems regardless of the number of label key/value pairs you use.

Alerts and Notifications

Notifications

Improved email alert notifications

We recently made some significant improvements to our email notification templates. These changes include adding the chart context, Space name, and War Room(s) with navigation links. We also updated the way the subject is built to ensure it's consistent with our other templates.

These improvements help to provide users with more context around their alert notifications, making it easier to quickly understand the nature of the issue and take appropriate action. By including chart context, Space name, and War Room(s) information, users can more easily identify the source of the problem and coordinate a response with their team members.

Receive only notifications for unreachable nodes

We've also enhanced our personal notification level settings to include an "Unreachable only" option. This option allows you to receive only reachability notifications for nodes disconnected from Netdata cloud. Previously this capability was only available combined with "All alerts". With this enhancement, you can now further customize you notification settings to more effectively manage your alerts and reduce notification fatigue.

ntfy agent alert notifications

The Netdata agent can now send alerts to ntfy servers. ntfy (pronounced "notify") is a simple HTTP-based pub-sub notification service. It allows you to send notifications to your phone or desktop via scripts from any computer, entirely without sign-up, cost or setup. It's also open source if you want to run your own server.

You can learn how to send ntfy alert notifications from a Netdata agent in our documentation.

Enhanced Real-Time Alert Synchronization on Netdata Cloud

Cloud to manage millions of alert state transitions daily. These transitions are transmitted from each connected agent through the agent-Cloud Link (ACLK). As with any communication channel, occasional data loss is unavoidable. Therefore, swiftly detecting missing transitions and reconciling discrepancies is crucial for maintaining real-time observability, regardless of scale.

We are thrilled to introduce a significant enhancement to our alert synchronization protocol between Netdata Agents and Netdata Cloud. This upgrade ensures faster transmission of alert states and prompt resolution of any temporary inconsistencies.

In the past, whenever a state transition change occurred, a message with a sequencing number was sent from the Agent to the Cloud. This method resulted in numerous read/write operations, generating excessive load on our Alerts database in the Cloud. Furthermore, it assumed that all messages had to be processed sequentially, imposing unnecessary constraints and restricting our scaling options for message brokers.

Our revamped protocol implements a far more efficient method. Instead of relying on sequencing numbers, we now use a checksum value calculated by both the Cloud and the Agent to verify synchronization. This approach not only lessens the burden on our Alerts database but also eliminates the dependency on sequential message processing, permitting out-of-order message delivery.

The enhanced synchronization and scaling capabilities allow us to address certain edge cases where users experienced out-of-sync alerts on the Cloud. Consequently, we can now deliver a superior service to our users.

Visualizations / Charts and Dashboards

Events Feed

We're committed to continually improving our [Events Feed](https://learn.netdata.cloud/docs/troubleshooting-and-machine-learning events-feed), which we introduced in version 1.38. We've made several user experience (UX) improvements to make the Events Feed even more useful for troubleshooting purposes.

One of the key improvements we made was the addition of a bar chart showing the distribution of events over time. This chart helps users quickly identify interesting time periods to focus on during troubleshooting. By visualizing the distribution of events across time, users can more easily spot patterns or trends that may be relevant to their troubleshooting efforts.

These improvements help to make the Events Feed an even more valuable tool, helping you troubleshoot issues more quickly and effectively. We will continue to explore ways to enhance the Events Feed and other features of our monitoring tool to provide the best possible user experience.

Machine Learning

As part of our Machine Learning Roadmap we have been working to persist trained models to the db so that the models used in Netdata's native anomaly detection capabilities will not be lost on restarts and instead be persisted to the database. This is an important step on the way to extending the ML defaults to train on the last 24 hours by default in the near future (as discussed more in this blog post). This will help improve anomaly detection performance, reducing false positives and making anomaly rates more robust to system and netdata restarts where previously models would need to be fully re-trained.

This is an area of quite active development right now and there are still a few more pieces of work to be done in coming releases. If interested you can follow along with any area/ML issues in netdata/netdata-cloud or netdata/netdata and check out active PR's here.

Installation and Packaging

Improved Linux compatibility

We have updated the bundled version of makeself used to create static builds, which was almost six years out of date, to sync it with the latest upstream release. This update should significantly improve compatibility on more exotic Linux systems.

We have also updated the metadata embedded in the archive to better reflect the current state of the project. This ensures that the project is up to date and accurately represented, providing users with the most relevant and useful information.

You can find more details about these updates in our Github repository.

Administration

New way to retrieve netdata.conf

Previously, the only way to get a default netdata.conf file was to start the agent and query the /netdata.conf API endpoint. This worked well enough for checking the effective configuration of a running agent, but it also meant that edit-config netdata.conf didn't work as users expect, if there is no netdata.conf file. It also meant that you couldn't check the effective configuration if you have the web server disabled.

We have now added the netdatacli dumpconfig command, which outputs the current

netdata.conf, exactly like the web API endpoint does. In the future we will look into making the edit-config command a bit smarter,

so that it can provide the option to automatically retrieve the live netdata.conf.

Documentation and Demos

We're excited to announce the completion of a radical overhaul of our documentation site, available at learn.netdata.cloud. Our new site features a much clearer organization of content, a streamlined publishing process, and a powerful Google search bar that searches all available resources for articles matching your queries.

We've restructured and improved dozens of articles, updating or eliminating obsolete content and deduplicating similar or identical content. These changes help to ensure that our documentation remains up-to-date and easy to navigate.

Even seasoned Netdata power users should take a look at our new Deployment in Production section, which includes features and suggestions that you may have missed in the past.

We're committed to maintaining the highest standards for our documentation and invite our users to assist us in this effort. The "Edit this page" button, available on all published articles, allows you to suggest updates or improvements by directly editing the source file.

We hope that our new documentation site helps you more effectively use and understand our monitoring tool, and we'll continue to make improvements and updates based on your feedback.

Deprecation notice

The following items will be removed in our next minor release (v1.40.0):

Patch releases (if any) will not be affected.

| Component | Type | Will be replaced by |

|---|---|---|

| python.d/nvidia_smi | collector | go.d/nvidia_smi |

Deprecated in this release

In accordance with our previous deprecation notice, the following items have been removed in this release:

| Component | Type | Replaced by |

|---|---|---|

| python.d/ntpd | collector | go.d/ntpd |

| python.d/proxysql | collector | go.d/proxysql |

| python.d/rabbitmq | collector | go.d/rabbitmq |

Netdata Release Meetup

Join the Netdata team on the 9th of May, at 16:00 UTC for the Netdata Release Meetup.

Together we’ll cover:

- Release Highlights.

- Acknowledgements.

- Q&A with the community.

RSVP now - we look forward to meeting you.

Support options

As we grow, we stay committed to providing the best support ever seen from an open-source solution. Should you encounter an issue with any of the changes made in this release or any feature in the Netdata Agent, feel free to contact us through one of the following channels:

- Netdata Learn: Find documentation, guides, and reference material for monitoring and troubleshooting your systems with Netdata.

- GitHub Issues: Make use of the Netdata repository to report bugs or open a new feature request.

- GitHub Discussions: Join the conversation around the Netdata development process and be a part of it.

- Community Forums: Visit the Community Forums and contribute to the collaborative knowledge base.

- Discord Server: Jump into the Netdata Discord and hang out with like-minded sysadmins, DevOps, SREs, and other troubleshooters. More than 1400 engineers are already using it!

Running survey

Helps us make Netdata even greater! We are trying to gather valuable information that is key for us to better position Netdata and ensure we keep bringing more value to you.

We would appreciate if you could take some time to answer this short survey (4 questions only).

Acknowledgements

We would like to thank our dedicated, talented contributors that make up this amazing community. The time and expertise that you volunteer are essential to our success. We thank you and look forward to continuing to grow together to build a remarkable product.

- @farax4de for fixing an exception in the CEPH collector.

- @ghanapunq for adding ethtool to the list of third-party collectors.

- @intelfx for fixing the accounting of Btrfs unallocated space in the proc plugin.

- @k0ste for fixing an issue with x86_64 RPM builds with eBPF.

- @slavox for fixing a typo in the documentation.

-

@vobruba-martin for fixing the

--release-channeland--nightly-channeloptions inkickstart.sh. - @bompus for fixing an issue preventing non-interactive installs of our static builds from fully automatic.

- @D34DC3N73R for adding Docker instructions to enable NVIDIA GPUs

Published by netdatabot over 1 year ago

The first patch release for v1.38 updates the version of OpenSSL included in our static builds

and Docker images to v1.1.1t, to resolve a few moderate security vulnerabilities in v1.1.1n.

The patch also includes the following minor bug fixes:

-

We fixed the handling of dimensions with no data in a specific timeframe.

When the metrics registry recorded a dimension as present in a specific timeframe, but the dimension did not have any data for that timeframe,

the query engine would return random data that happened to be in memory. - We fixed occasional crashes during shutdown when not using eBPF.

- We fixed the systemd service file handling on systems using a systemd version older than v235.

- We fixed build failures on FreeBSD 14 release candidates, FreeBSD < 13.1, and environments with Linux kernel version < 5.11.

Support options

As we grow, we stay committed to providing the best support ever seen from an open-source solution. Should you encounter

an issue with any of the changes made in this release or any feature in the Netdata Agent, feel free to contact us

through one of the following channels:

-

Netdata Learn: Find documentation, guides, and reference material for monitoring and

troubleshooting your systems with Netdata. -

GitHub Issues: Make use of the Netdata repository to report bugs or open

a new feature request. -

GitHub Discussions: Join the conversation around the Netdata

development process and be a part of it. -

Community Forums: Visit the Community Forums and contribute to the collaborative

knowledge base. -

Discord: Jump into the Netdata Discord and hangout with like-minded sysadmins,

DevOps, SREs and other troubleshooters. More than 1300 engineers are already using it!

Published by netdatabot over 1 year ago

-

-

DBENGINE v2

The new open-source database engine for Netdata Agents, offering huge performance, scalability and stability improvements, with a fraction of memory footprint! -

FUNCTION: Processes

Netdata beyond metrics! We added the ability for runtime functions, that can be implemented by any data collection plugin, to offer unlimited visibility to anything, even not-metrics, that can be valuable while troubleshooting. -

Events Feed

Centralized view of Space and Infrastructure level events about topology changes and alerts. -

NOTIFICATIONS: Slack, PagerDuty, Discord, Webhooks

Netdata Cloud now supports Slack, PagerDuty, Discord, Webhooks. -

Role-based access model

Netdata Cloud supports more roles, offering finer control over access to infrastructure.

-

-

Integrations

New and improved plugins for data collection, alert notifications, and data exporters. -

Health Monitoring and Alerts Notification Engine

Changes to the Netdata Health Monitoring and Notifications engine.

❗We are keeping our codebase healthy by removing features that are end-of-life. Read the deprecation notice to check if you are affected.

Netdata open-source growth

- Almost 62,000 GitHub Stars

- Over four million monitored servers

- Almost 88 million sessions served

- Over 600 thousand total nodes in Netdata Cloud

Release highlights

Dramatic performance and stability improvements, with a smaller agent footprint

We completely reworked our custom-made, time series database (dbengine), resulting in stunning improvements to performance, scalability, and stability, while at the same time significantly reducing the agent memory requirements.

On production-grade hardware (e.g. 48 threads, 32GB ram) Netdata Agent Parents can easily collect 2 million points/second while servicing data queries for 10 million points / second, and running ML training and Health querying 1 million points / second each!

For standalone installations, the 64bit version of Netdata runs stable at about 150MB RAM (Reside Set Size + SHARED), with everything enabled (the 32bit version at about 80MB RAM, again with everything enabled).

Key highlights of the new dbengine

Disk based indexing

We introduced a new journal file format (*.jnfv2) that is way faster to initialize during loading. This file is used as a disk-based index for all metric data available on disk (metrics retention), reducing the memory requirements of dbengine by about 80%.

New caching

3 new caches (main cache, open journal cache, extent cache) have been added to speed up queries and control the memory footprint of dbengine.

These caches combined, offer excellent caching even for the most demanding queries. Cache hit ratio now rarely falls bellow 50%, while for the most common use cases, it is constantly above 90%.

The 3 caches support memory ballooning and autoconfigure themselves, so they don't require any user configuration in netdata.conf.

At the same time, their memory footprint is predictable: twice the memory of the currently collected metrics, across all tiers. The exact equation is:

METRICS x 4KB x (TIERS - 1) x 2 + 32MB

Where:

-

METRICS x 4KB x TIERSis the size of the concurrently collected metrics. -

4KBis the page size for each metric. -

TIERSis whatever configured for[db].storage tiersinnetdata.conf; use(TIERS - 1)when using 3 tiers or more (3 is the default). -

x 2 + 32MBis the commitment of the new dbengine.

The new combination of caches makes Netdata memory footprint independent of retention! The amount of metric data on disk, does not any longer affect the memory footprint of Netdata, it can be just a few MB, or even hundreds of GB!

The caches try to keep the memory footprint at 97% of the predefined size (i.e. twice the concurrently collected metrics size). They automatically enter a survival mode when memory goes above this, by paralleling LRU evictions and metric data flushing (saving to disk). This system has 3 distinct levels of operation:

- aggressive evictions, when caches are above 99% full; in this mode cache query threads are turned into page evictors, trying to remove the least used data from the caches.

- critical evictions, when caches are above 101% full; in this mode every thread that accesses the cache is turned into a batch evictor, not leaving the cache until the cache size is again within acceptable limits.

- flushing critical, when too many unsaved data reside in memory; in this mode, flushing is parallelized, trying to push data to disk as soon as possible.

The caches are now shared across all dbengine instances (all tiers).

LRU evictions are now smarter: the caches know when metrics are referenced by queries or by collectors and favor the ones that have been used recently by data queries.

New dbengine query engine

The new dbengine query engine is totally asynchronous, working in parallel while other threads are processing metrics points. Chart and Context queries, but also Replication queries, now take advantage of this feature and ask dbengine to preload metric data in advance, before they are actually needed. This makes Netdata amazingly fast to respond in data queries, even on busy parent that at the same time collect millions of points.

At the same time we support prioritization of queries based on their nature:

- High priority queries, are all those that can potentially block data collection. Such queries are tiers backfilling and the last replication query for each metric (immediately after which, streaming is enabled).

- Normal priority queries, are the ones that are initiated by users.

- Low priority queries, are the ones that can be delayed without affecting quality of the results, like Health and Replication queries.

- Best effort queries, are the lowest priority ones and are currently used by ML training queries.

Starvation is prevented by allowing 2% of lower priority queries for each higher priority queue. So, even when backfilling is performed full speed at 15 million points per second, user queries are satisfied up to 300k points per second.

Internally all caches are partitioned to allow parallelism up to the number of cores the system has available. On busy parents with a lot of data and capable hardware it is now easy for Netdata to respond to queries using 10 million points per second.

At the same time, extent deduplication has been added, to prevent the unnecessary loading and uncompression of an extent multiple times in a short time. This works like this: while a request to load an extent is in flight, and up to the time the actual extent has been loaded and uncompressed in memory, more requests to extract data from it can be added to the same in flight request! Since dbengine trying to keep metrics of the same charts to the same extent, combined with the feature we added to prepare ahead multiple queries, this extent deduplication now provides hit of above 50% for normal chart and context queries!

Metrics registry

A new metrics registry has been added that maintains an index of all metrics in the database, for all tiers combined.

Initialization is the metrics registry is fully multithreaded utilizing all the resources available on busy parents, improving start-up times significantly.

This metrics registry is now the only memory requirement related to retention. It keeps in memory the first and the last timestamps, along with a few more metadata, of all the metrics for which retention is available on disk. The metrics registry needs about 150 bytes per metric.

Streaming

The biggest change in streaming is that the parent agents now inherit the clock of their children, for their data. So, all timestamps about collected metrics reflect the timestamps on the children that collected them. If a child clock is ahead of the parent clock, the parent will still accept collected points for the child, and it will process them and save them, but on parent node restart the parent will refuse to load future data about a child database. This has been done in such a way that if the clock of the child is fixed (by adjusting it backwards), after a parent restart the child will be able to push fresh metrics to the parent again.

Related to the memory footprint of the agent, streaming buffers were ballooning up to the configured size and remained like that for the lifetime of the agent. Now the streaming buffers are increased to satisfy the demand, but then they are again decreased to a minimum size. On busy parents this has a significant impact on the overall memory footprint of the agent (10MB buffer per node x 200 child nodes on this parent, is 2GB - now they return to a few KB per node).

Active-Active parent clusters are now more reliable by detecting stale child connections and disconnecting them.

Several child to parent connection issues have been solved.

Replication

Replication now uses the new features of dbengine and pipelines queries preparation and metric data loading, improving drastically its performance. At the same time, the replication step is now automatically adjusted to the page size of dbengine, allowing replication to use the data are already loaded by dbengine and saving resources at the next iteration.

A single replication thread can now push metrics at a rate of above 1 million points / second on capable hardware.

Solved an issue with replication, where if the replicated time-frame had a gap at the beginning of the replicated period, then no replication was performed for that chart. Now replication skips the gap and continues replicating all the points available.

Replication does not replicate now empty points. The new dbengine has controls in place to insert gaps into the database which metrics are missing. Utilizing this feature, we have now stopped replicating empty points, saving bandwidth and processing time.

Replication was increasing the streaming buffers above the configured ones, when big replication messages had to fit in it. Now, instead of increasing the streaming buffers, we interrupt the replication query at a point that the buffer will be sufficient to accept the message. When queries are interrupted like this, the remaining query is then repeated until all of it executed.

Replication and data collection are now synchronized atomically at the sending side, to ensure that the parent will not have gaps at the point the replication ends and streaming starts.

Replication had discrepancies when the db mode was not dbengine. To solve these discrepancies, combined with the storage layer API changes introduced by the new dbengine, we had to rewrite them to be compliant. Replication can now function properly, without gaps at the parents, even when the child has db mode alloc, ram, save or map.

Netdata startup and shutdown

Several improvements have been performed to speed up agent startup and shutdown. Combined with the new dbengine, now Netdata starts instantly on single node installations and uses just a fraction of the time that was needed by the previous stable version, even on very busy parents with huge databases (hundreds of GB).